In today's digital age, data is generated constantly: user clicks, social media posts, sensor readings, financial transactions, and much more. The sheer amount of data we create is both an opportunity and a challenge for the digital economy. Raw, messy data is of little use on its own, but with AI data processing it can become a driver of innovation.

The basic premise is simple: AI and machine learning techniques systematically transform unstructured raw data into useful, valuable information. This transformation is essential for every kind of application, from training complex AI models to making strategic business decisions. It is an investment in efficiency, agility, and competitive advantage.

What Is AI Data Processing?

AI data processing refers to the deliberate use of artificial intelligence or machine learning algorithms to automate and improve the data lifecycle. Unlike traditional data processing, which often relies on static, rule-based systems, AI learns from the data it handles, adapts to new formats, and finds patterns that would take a human many attempts to find, or that a human would simply never notice.

It’s like taking a huge pile of unrefined rocks and turning it into a neat collection of polished, labelled, and sorted gems. The goal is to replace manual processes with AI-driven data processing which, used effectively, delivers quality, speed, and scalability.

Why AI Data Processing Matters

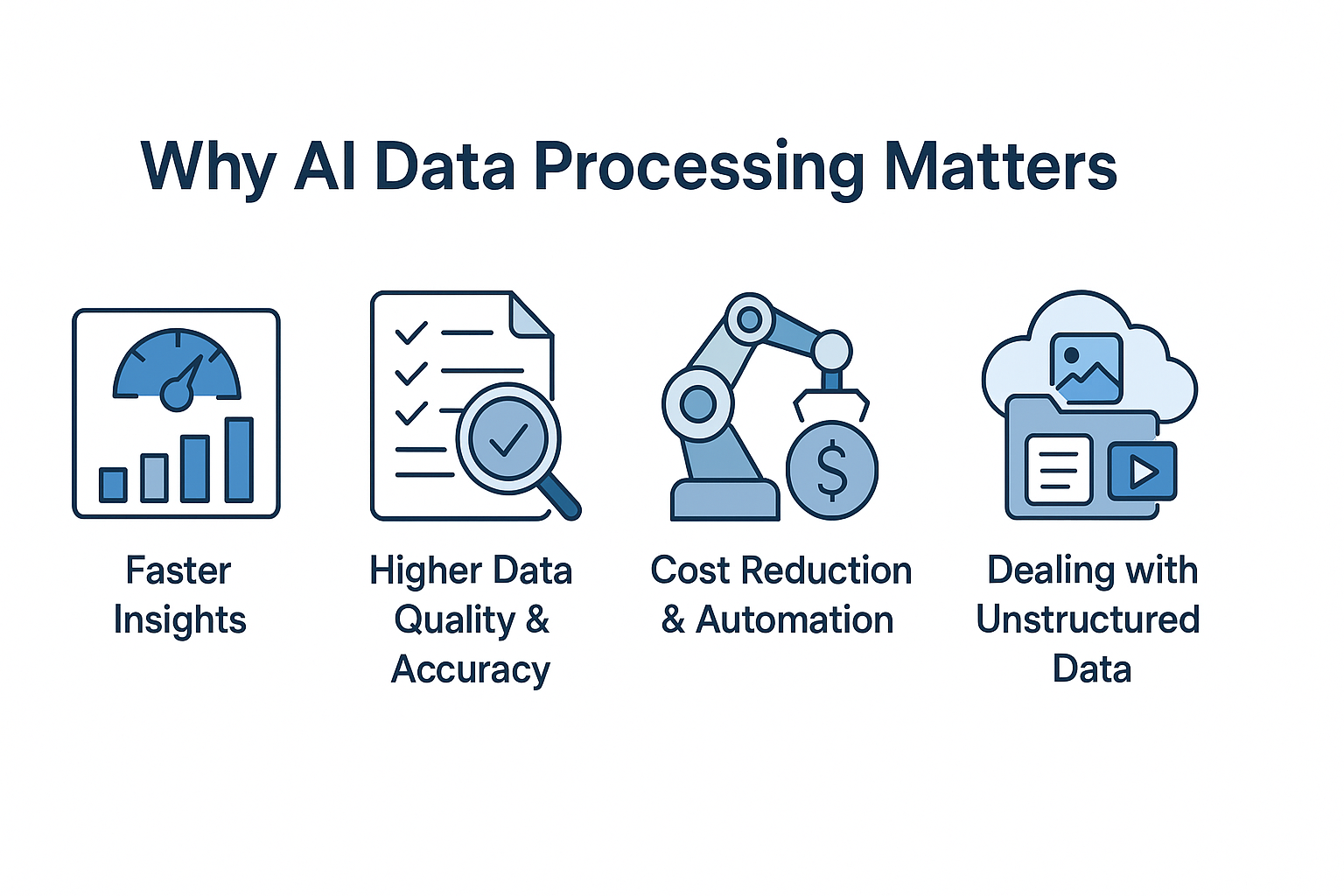

The sheer quantity and variety of contemporary data now exceed human capabilities. Data processing AI is no longer just a “nice to have”; it is now a requirement for every organization that wants to stay competitive.

- Faster Insights: AI systems can process and analyze enormous amounts of data far faster than humans, and they work around the clock without fatigue. This shortens the path from raw data to insight, enabling quicker decisions in highly dynamic environments.

- Higher Data Quality and Accuracy: AI can automatically detect, and even correct, errors, inconsistencies, and missing values, all of which must be handled to build reliable machine learning models.

- Cost Reduction and Automation: AI can take on long-winded, complex, and often monotonous tasks, from cleaning the data through to interpreting it and producing the final insights. This results in significant operational cost savings and frees people to work on higher-value, more creative tasks.

- Dealing with Unstructured Data: Much of the valuable data today comes in unstructured formats such as images, video, text (e.g. customer reviews and legal documents), and audio. Databases and tools designed around AI are optimized to process and analyze these kinds of datasets for tasks such as natural language processing (NLP) and computer vision.

Data Collection: Gathering Raw Information

The first essential step is to collect the raw data. Data can come from anywhere, including customer databases, IoT sensors, financial reports, or public websites. For example, a business might scrape publicly available demographic data with a LinkedIn Profile Scraper, or use a LinkedIn Company Scraper to monitor trends across industry and company pages relevant to its business.

The data collected this way forms a “raw stream.” It is usually inconsistent in structure and content, and highly heterogeneous. Various tools can ingest it either incrementally, as a continuous stream, or in bulk, by requesting and loading large batches from each source.
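
The two ingestion styles mentioned above can be sketched with Python generators. This is a minimal illustration, not a production pipeline: `fetch_records` is a hypothetical stand-in for a database query, sensor feed, or scraper output, and the batch size is an arbitrary assumption.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def fetch_records(n: int) -> Iterator[dict]:
    """Hypothetical data source: yields n raw records one at a time."""
    for i in range(n):
        yield {"id": i, "value": i * 10}


def ingest_incrementally(source: Iterable[dict]) -> Iterator[dict]:
    """Stream mode: pass each record downstream as soon as it arrives."""
    for record in source:
        yield record


def ingest_in_bulk(source: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Bulk mode: group records into fixed-size batches before processing."""
    iterator = iter(source)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:  # source exhausted
            break
        yield batch
```

For instance, `ingest_in_bulk(fetch_records(7), batch_size=3)` yields batches of 3, 3, and 1 records, while `ingest_incrementally` hands records over one by one with no waiting.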

Data Cleaning and Preprocessing for AI Models

If you put garbage into an artificial intelligence (AI) system, you will get garbage out. This step is arguably the most critical to the success of any AI-related project. AI models are highly sensitive to inconsistencies, so the data must be properly prepared. Preparing data involves many steps, and with the recent introduction of advanced AI models, many of them are now almost as simple as pushing a button:

- Missing Values: Rather than simply dropping incomplete records, AI algorithms can detect missing data points and estimate them from the rest of the record and from patterns in complete records.

- Noise and Outliers: An AI-based model can automatically flag records with values that fall outside the expected range, and can clear an entire dataset of irrelevant “noise” and duplicate records, leading to higher output data quality.

- Transformation and Standardization of Data: Data collected from different sources often arrives in different formats or units. An AI-based model can automate the transformation into one standard format, such as rescaling the values in a dataset to a normalized numerical range.
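
The preprocessing steps above can be sketched with simple statistical stand-ins. This sketch swaps in median imputation, an interquartile-range (IQR) outlier rule, exact-duplicate removal, and min-max scaling in place of the learned models the text describes. The 1.5 * IQR threshold is a common convention, used here as an assumption.

```python
import statistics
from typing import List, Optional


def impute_median(values: List[Optional[float]]) -> List[float]:
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def flag_outliers_iqr(values: List[float], k: float = 1.5) -> List[bool]:
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]


def dedupe(records: List[tuple]) -> List[tuple]:
    """Drop exact duplicate records while preserving their original order."""
    return list(dict.fromkeys(records))


def min_max_scale(values: List[float]) -> List[float]:
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]
```

For example, `impute_median([10.0, 12.0, None, 11.0, 95.0, 13.0])` fills the gap with 12.0, and `flag_outliers_iqr` then marks only the 95.0 reading. Learned approaches such as model-based imputation follow the same detect-then-fix pattern, with a trained estimator in place of the fixed rule.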

Feature Extraction: Turning Data Into Insights

Once the data has been cleaned, it must be organized into “features,” the relevant, measurable characteristics from which an AI model will learn. For example, raw customer purchase history may be transformed into features such as “average monthly spend,” “recency of last purchase,” and “total number of distinct product categories purchased.”
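
The purchase-history example above can be sketched in plain Python. The record layout (`date`, `amount`, `category` keys), the reference date `as_of`, and the 30-day approximation of a month are all assumptions made for illustration.

```python
from datetime import date
from typing import Dict, List


def customer_features(purchases: List[dict], as_of: date) -> Dict[str, float]:
    """Turn one customer's raw purchase rows into model-ready features."""
    dates = [p["date"] for p in purchases]
    total_spend = sum(p["amount"] for p in purchases)
    # Approximate the active period in 30-day months (at least one month).
    months = max((as_of - min(dates)).days / 30, 1.0)
    return {
        "avg_monthly_spend": total_spend / months,
        "days_since_last_purchase": float((as_of - max(dates)).days),
        "distinct_categories": float(len({p["category"] for p in purchases})),
    }
```

Each raw row carries a single transaction, but the output describes the customer as a whole, which is the level a churn or recommendation model would typically learn from.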

Feature engineering, sometimes assisted by machine learning, selects raw data columns or combines them into new variables with greater predictive power. This is where the value of the data really starts to emerge for the downstream AI application.

Training and Testing: How Machines Learn from Data

Once the data is cleaned and prepared, it is used to train an artificial intelligence (AI) model, usually by feeding it into a machine learning algorithm that iteratively adjusts the model's internal parameters until it captures the patterns in the data as well as possible. In supervised learning, the model learns to map input features to a dependent variable, either predicting a value (e.g. a future observation) or assigning a class (classification). In unsupervised learning, there is no dependent variable; the model simply finds groupings in the data.
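
To make “iteratively adjusting internal parameters” concrete, here is a minimal sketch of one common approach: batch gradient descent on a one-variable linear model `y ≈ w*x + b` with mean squared error loss. The learning rate, epoch count, and toy data are assumptions. Real models have far more parameters, but they follow the same loop.

```python
from typing import List, Tuple


def train_linear(xs: List[float], ys: List[float],
                 lr: float = 0.05, epochs: int = 2000) -> Tuple[float, float]:
    """Fit y = w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # initial parameters
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Move each parameter a small step against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b
```

On data generated by `y = 2x + 1`, such as `xs = [0, 1, 2, 3, 4]` and `ys = [1, 3, 5, 7, 9]`, the loop converges to approximately `w = 2`, `b = 1`.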

The prepared dataset is normally divided into three subsets:

- Training Set: Data that is used to train the model.

- Validation Set: Data used to tune the model's structure and settings (hyperparameters) and to compare candidate models.

- Testing Set: Data the model has never seen, held back to evaluate its performance under “real-world” conditions.
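
The three-way split above can be sketched as a shuffled split. The 70/15/15 proportions and the fixed seed are illustrative assumptions, not fixed rules.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_test_split(rows: Sequence[T], val_frac: float = 0.15,
                         test_frac: float = 0.15, seed: int = 42
                         ) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle rows, then cut them into training, validation, and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible split
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test
```

For time-ordered data such as transactions or sensor readings, a chronological split, with the most recent data used for testing, is usually more realistic than a random shuffle.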

A well-performing model must demonstrate that it generalizes: what it learned from the training data must produce accurate predictions on data it has never seen.

This cycle of training, tuning, and evaluating is usually repeated several times during AI data processing, with the model improved on each pass.
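
The train, validate, and test cycle described above can be sketched with a deliberately tiny model. This sketch assumes a one-variable ridge regression `y ≈ w*x` (no intercept), whose penalty strength `lam` is the hyperparameter being tuned. Each candidate is fitted on the training set and scored on the validation set, and only the winner is scored once on the test set.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def fit_ridge(train: Sequence[Point], lam: float) -> float:
    """Closed-form ridge fit of y = w*x with penalty lam * w^2."""
    sxy = sum(x * y for x, y in train)
    sxx = sum(x * x for x, _ in train)
    return sxy / (sxx + lam)


def mse(w: float, data: Sequence[Point]) -> float:
    """Mean squared error of the model y = w*x on the given points."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def tune_and_evaluate(train: Sequence[Point], val: Sequence[Point],
                      test: Sequence[Point], lambdas: List[float]
                      ) -> Tuple[float, float]:
    """Pick the penalty with the lowest validation error, then score it on test."""
    best_lam = min(lambdas, key=lambda lam: mse(fit_ridge(train, lam), val))
    w = fit_ridge(train, best_lam)
    return best_lam, mse(w, test)
```

Keeping the test set out of the selection step is the point of the design: if test error were used to choose `lam`, it would no longer be an honest estimate of performance on unseen data.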

The Power of Real-Time AI Data Processing

So far, this article has focused on batch processing, but much of the real innovation in modern business comes from processing data streams in flight. Real-time artificial intelligence and event processing are essential to any application that requires an immediate response, such as fraud detection, self-driving vehicles, or stock trading.

Real-time AI data processing relies on low-latency stream processing platforms to ingest and analyze data as it is produced. This lets the AI either deliver predictive insights as the data streams by, or trigger automated actions and workflows in real time.
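
The in-flight analysis described above can be sketched as a rolling-window anomaly detector that inspects each event as it arrives. In this sketch, the stream is a plain Python iterable standing in for a real streaming platform, and a rolling z-score rule stands in for a trained model. The window size and threshold are assumptions.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, Iterator, Tuple


def detect_anomalies(stream: Iterable[float], window: int = 20,
                     threshold: float = 3.0) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for events far from the recent rolling mean."""
    recent: Deque[float] = deque(maxlen=window)  # keeps only the last `window` events
    for i, value in enumerate(stream):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            # Flag the event if it lies more than `threshold` std devs from the mean.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield i, value  # a real system might raise an alert or start a workflow here
        recent.append(value)
```

Because the detector is a generator, each event is checked the moment it is consumed, without waiting for a batch to accumulate. Memory use stays bounded by the window size.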

For example, when a new job posting is collected (perhaps by a script that scrapes LinkedIn jobs), it could be categorized, have its salary range estimated, and be matched to suitable candidates within milliseconds. The sources feeding such a pipeline range from simple, straightforward scripts, like the ones you might write while learning how to scrape LinkedIn data using Python, to enterprise-grade data integration platforms.
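
The categorize-and-match step in that example can be sketched with keyword overlap and Jaccard similarity. This is a hypothetical illustration: the `CATEGORY_KEYWORDS` lists, the candidate skill sets, and both scoring rules are assumptions, a production system would more likely use trained classifiers and embeddings, and salary estimation is not shown.

```python
from typing import Dict, List, Set

# Hypothetical keyword lists; a real system would likely use a trained classifier.
CATEGORY_KEYWORDS: Dict[str, Set[str]] = {
    "data": {"python", "sql", "spark", "etl"},
    "frontend": {"javascript", "react", "css", "html"},
}


def tokenize(text: str) -> Set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    return {word.strip(".,;:()").lower() for word in text.split()}


def categorize(posting: str) -> str:
    """Assign the category whose keywords overlap most with the posting."""
    words = tokenize(posting)
    scores = {cat: len(words & kws) for cat, kws in CATEGORY_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "other"


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by size of the union."""
    return len(a & b) / len(a | b) if a | b else 0.0


def match_candidates(posting_skills: Set[str],
                     candidates: Dict[str, Set[str]], top_n: int = 3) -> List[str]:
    """Rank candidates by skill overlap with the posting, best first."""
    ranked = sorted(candidates,
                    key=lambda name: jaccard(posting_skills, candidates[name]),
                    reverse=True)
    return ranked[:top_n]
```

Both functions run in time proportional to the number of keywords and candidates, which is what makes millisecond-scale responses plausible for modest candidate pools.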

Choosing the Best Software for Real-Time AI Data Processing

Choosing the right tools is essential for building a data pipeline that scales effectively and runs efficiently. Organizations need robust, fault-tolerant solutions to handle the velocity and volume of the data streaming in.

When evaluating the best software for real-time AI data processing, look for solutions that offer:

- Unified Data and AI Platforms: Tools such as Databricks, Snowflake, or similar services that let you do data engineering, analytics, and machine learning in a single environment can simplify the workflow.

- Event Streaming: Software such as Apache Kafka, which supports high-throughput, real-time data streaming, is essential for integration because it lets data be consumed as a continuous stream of events.

- Automated Feature Engineering: Wherever possible, look for tools that automatically build or suggest features. This is one of the areas of preprocessing where automation pays off most, easing much of the manual effort described earlier.
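
The idea behind automated feature suggestion can be sketched as a brute-force search. Candidate features are generated from pairwise products and ratios of existing columns, and all columns are ranked by the absolute Pearson correlation with the target. Correlation ranking is only one simple heuristic and an assumption here, real tools use richer transformations and selection methods, and the column names in the usage example are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient (0.0 if either input is constant)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def suggest_features(columns: Dict[str, List[float]], target: List[float],
                     top_n: int = 3) -> List[Tuple[str, float]]:
    """Generate pairwise product/ratio features and rank all by |correlation|."""
    candidates = dict(columns)  # start from the raw columns
    for a, b in combinations(columns, 2):
        candidates[f"{a}*{b}"] = [x * y for x, y in zip(columns[a], columns[b])]
        if all(v != 0 for v in columns[b]):  # skip ratios that would divide by zero
            candidates[f"{a}/{b}"] = [x / y for x, y in zip(columns[a], columns[b])]
    scored = [(name, abs(pearson(vals, target))) for name, vals in candidates.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_n]
```

Given hypothetical `price` and `quantity` columns and a revenue target, the search surfaces `price*quantity` as the top feature without anyone specifying it by hand.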

Real-World Applications of AI Data Processing

Turning raw data into insight has use cases in virtually every industry.

- Personalized Marketing: Retailers use AI data processing to analyze online purchase data, recognize trends and patterns in customer behavior, and then create product recommendations and targeted advertising campaigns for products customers may like.

- Predictive Maintenance: In manufacturing, sensors on machinery monitor production every second and generate endless streams of data. AI data processing can analyze these streams to predict equipment failures before they happen, so maintenance can be scheduled in advance, drastically reducing downtime and potentially saving manufacturers millions of dollars.

- Financial Services: Banks use AI to analyze transactional data in real time and flag fraudulent transactions, scoring each one based on anomalous activity and high-risk behavioral patterns.

- HR and Recruiting: Businesses can use a LinkedIn scraping API to build their own profiles and tagged datasets of market data on job roles, skills, and salaries, then use them to shape their hiring strategy and compensation packages.
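
The personalized-marketing case above can be sketched with a simple co-purchase recommender. Products that often appear in the same basket are suggested to customers who own one of them. Co-occurrence counting is a basic stand-in for the more sophisticated models retailers use, and the basket data in the usage example is hypothetical.

```python
from collections import Counter
from itertools import permutations
from typing import Dict, List, Set


def build_co_purchase_counts(baskets: List[Set[str]]) -> Dict[str, Counter]:
    """Count how often each ordered pair of products shares a basket."""
    counts: Dict[str, Counter] = {}
    for basket in baskets:
        for a, b in permutations(basket, 2):
            counts.setdefault(a, Counter())[b] += 1
    return counts


def recommend(owned: Set[str], counts: Dict[str, Counter], top_n: int = 3) -> List[str]:
    """Suggest products frequently bought together with what the customer owns."""
    scores: Counter = Counter()
    for product in owned:
        scores.update(counts.get(product, Counter()))
    for product in owned:
        scores.pop(product, None)  # don't recommend what the customer already has
    return [item for item, _ in scores.most_common(top_n)]
```

With hypothetical baskets such as `{"tent", "stove"}`, `{"tent", "sleeping bag"}`, and `{"tent", "stove", "lantern"}`, a customer who bought a tent is first offered a stove, because that pair co-occurs most often.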

Conclusion: AI for Data Processing

Converting raw, chaotic data into specific, clear, and actionable insight is at the heart of contemporary business intelligence. AI data processing is a powerful driver of business intelligence itself, as it automates some of the most complex and time-consuming parts of cleaning, transforming, and analyzing information.

With AI data processing, businesses can manage their data and, more importantly, use it to move faster, lower costs, and make decisions that help them stand apart from the competition.

FAQs for AI data processing

What is the main difference between traditional data processing and AI data processing?

The primary distinction is in automation and adaptability. Traditional data processing methods rely on predefined, rigid rules. When the data format changes, the process fails. AI for data processing uses machine learning models to learn from the data itself. It can automatically detect and correct errors, adapt to different types of data (e.g. unstructured text, images), and identify subtle patterns that human programmers did not specifically direct it to find.

How does AI improve data quality?

AI improves data quality in two significant ways: imputation and anomaly detection. Imputation uses statistical and machine learning models to estimate and “fill in” missing data based on surrounding data trends. Anomaly detection automatically flags or corrects outliers and inconsistencies in the data, preventing low-quality data from corrupting the training of machine learning models.
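
Filling gaps “based on surrounding data trends” can be illustrated with linear interpolation, one of the simplest statistical imputation methods. It is used here as a stand-in for model-based imputation. The sketch assumes an evenly spaced series with `None` marking missing readings.

```python
from typing import List, Optional


def interpolate_gaps(series: List[Optional[float]]) -> List[Optional[float]]:
    """Fill interior None gaps with a straight line between the known neighbors.

    Leading or trailing gaps have only one neighbor and are left as None.
    """
    filled = list(series)
    known = [i for i, v in enumerate(series) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (series[right] - series[left]) / (right - left)  # slope between neighbors
        for i in range(left + 1, right):
            filled[i] = series[left] + step * (i - left)
    return filled
```

For example, `[1.0, None, None, 4.0, None]` becomes `[1.0, 2.0, 3.0, 4.0, None]`. The trailing gap stays empty because there is no later reading to interpolate toward.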

Is AI data processing instantaneous every time?

Not necessarily. Data processing is usually classified as either batch processing, which works on large blocks of historical data (often for reporting) and typically runs at daily or weekly intervals, or real-time processing, which updates results as data streams arrive, with little to no delay. Real-time processing is essential for applications that must respond immediately, such as fraud alerts or autonomous navigation systems, and it may require specialized platforms such as those used in real-time artificial intelligence and event processing systems.
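
The difference between the two modes can be sketched with the same computation, an average, done both ways. The batch version waits for a complete block of data. The streaming version keeps a running result that is updated in constant time per event, using the standard incremental-mean formula. The class name and sample values are illustrative assumptions.

```python
from typing import List


def batch_average(block: List[float]) -> float:
    """Batch mode: compute the result once, over a complete block of data."""
    return sum(block) / len(block)


class StreamingAverage:
    """Real-time mode: update the result incrementally as each event arrives."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> float:
        self.count += 1
        # Incremental mean: move the current mean toward the new value.
        self.mean += (value - self.mean) / self.count
        return self.mean
```

Both approaches arrive at the same final answer. The streaming version, however, has an up-to-date value after every event, which is what fraud alerts and similar real-time applications need.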