Building a pipeline used to mean having a list of contacts with names and phone numbers. Today, to succeed in B2B sales, you need deeper knowledge about the prospects themselves: what they are looking for and when they will be ready to buy. This shift in how sales teams obtain information has placed the LinkedIn crawler at the center of the modern sales intelligence stack. By automatically collecting professional data, this tool lets organizations move from maintaining static lists to working with dynamic, actionable insights.

The Role of LinkedIn Data in Today’s Sales Intelligence Stack

Sales intelligence is no longer simply about contact information; it is about getting to know the professional ecosystem. A large part of that ecosystem lives on LinkedIn, which hosts one of the most comprehensive publicly available collections of professional identities, company structures and industry changes, updated in near real time. A company that relies on old static lead lists is working with data that is months or years out of date: people change jobs, companies pivot, and job titles evolve every day.

Integrating LinkedIn into your sales stack gives you a “living” view of your target market. Sales development representatives (SDRs) can personalize outreach based on recent promotions, shared connections and specialized skills listed on LinkedIn profiles.

Beyond SDR personalization, leadership can identify emerging markets and competitor movements before they become obvious and act accordingly. Without a reliable means of harnessing LinkedIn data, sales teams are flying blind, relying on guesswork instead of evidence-based sales strategies.

What Is a LinkedIn Crawler and How Does It Work?

A LinkedIn crawler is a specialized tool for retrieving information from the LinkedIn website. Crawlers are more sophisticated than simple scrapers: instead of retrieving the text of a single profile page, they follow links across multiple pages and navigate paginated search results. They can also capture the relationships between different types of information, for example how a user profile is linked to a company profile and vice versa.
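To make the difference between fetching one page and crawling concrete, here is a minimal sketch in Python. It assumes a hypothetical in-memory `PAGES` dictionary standing in for fetched pages (no network requests are made), and the URL paths and the `crawl` function are illustrative names, not part of any real tool. It follows links breadth-first, walks a paginated search result, and records which company each profile links to.

```python
from collections import deque

# Hypothetical in-memory "site": each page maps to the links found on it.
# A real crawler would fetch and parse HTML instead of reading a dict.
PAGES = {
    "/search?page=1": ["/profile/ana", "/profile/ben", "/search?page=2"],
    "/search?page=2": ["/profile/cy"],
    "/profile/ana": ["/company/acme"],
    "/profile/ben": ["/company/acme"],
    "/profile/cy": ["/company/globex"],
    "/company/acme": [],
    "/company/globex": [],
}


def crawl(start, pages, max_pages=100):
    """Breadth-first crawl: visit each reachable page once, in discovery order."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue and len(order) < max_pages:
        url = queue.popleft()
        order.append(url)
        for link in pages.get(url, []):
            if link not in seen:  # never queue the same page twice
                seen.add(link)
                queue.append(link)
    return order


visited = crawl("/search?page=1", PAGES)

# Relationship between entity types: which company pages each profile links to.
profile_to_company = {
    url: [link for link in PAGES[url] if link.startswith("/company/")]
    for url in visited
    if url.startswith("/profile/")
}
```

The `seen` set is what keeps the crawl from looping when several profiles point at the same company page.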

Crawlers are scripts, commonly written in languages like Python, which provides powerful libraries for interacting with websites and parsing the HTML their servers return. As part of the automated crawling process, a crawler “emulates” the behavior of a user visiting the site, including scrolling and clicking through pages to find the information associated with a user or company profile.

After retrieving unstructured data from a website, the crawler converts it into a structured format that an analyst can readily read, such as CSV or JSON. Structured output in either format is also easier to harmonize and integrate with other automation engines and software applications, including Customer Relationship Management (CRM) systems.
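As a rough illustration of that conversion step, the sketch below serializes a couple of already-extracted records to JSON and CSV using only the Python standard library. The sample records and the field names (`name`, `title`, `company`) are made up for the example.

```python
import csv
import io
import json

# Hypothetical records, as they might look after fields are extracted from pages.
records = [
    {"name": "Ana Ruiz", "title": "Head of Sales", "company": "Acme"},
    {"name": "Ben Ito", "title": "VP Engineering", "company": "Globex"},
]

FIELDS = ["name", "title", "company"]


def to_json(rows):
    """Serialize the records as a JSON array."""
    return json.dumps(rows, indent=2)


def to_csv(rows):
    """Serialize the records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

JSON tends to suit API-style integrations, while CSV is convenient for spreadsheet review or bulk import.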

From Profiles to Pipelines: Turning LinkedIn Data into Revenue Signals

Using a LinkedIn crawler, you can derive revenue signals from the information contained in individual profiles. A “revenue signal” is a piece of information suggesting that a potential customer may be preparing to buy. For example, if a crawler finds that an organization recently added five new executives to its DevOps team, that suggests the organization is investing in its technical capabilities and may be looking for new products to purchase.

By consistently monitoring these profiles, organizations can create automated workflows for their sales teams. For instance, if a high-value target moves from “manager” to “director,” you can automatically notify sales representatives to send a note congratulating the individual on their new promotion and reopening the sales conversation. This level of responsiveness is not something that most people can achieve manually, let alone at scale. However, it is an expectation for high-performing sales organizations.
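One way such a workflow could be triggered is by comparing two crawl snapshots of the same profiles. The sketch below is illustrative only: the `SENIORITY` ladder, the naive keyword matching on titles, and the profile IDs are all assumptions for the example.

```python
# Assumed seniority ladder, lowest to highest. Real titles are messier than this.
SENIORITY = ["manager", "director", "vp"]


def rank(title):
    """Return the highest ladder level mentioned in a title, or None if none match."""
    lowered = title.lower()
    matches = [i for i, level in enumerate(SENIORITY) if level in lowered]
    return max(matches) if matches else None


def detect_promotions(previous, current):
    """Compare two snapshots (profile_id -> title) and list upward moves."""
    events = []
    for profile_id, new_title in current.items():
        old_title = previous.get(profile_id)
        if old_title is None:
            continue  # first time we see this profile, nothing to compare
        old_rank, new_rank = rank(old_title), rank(new_title)
        if old_rank is not None and new_rank is not None and new_rank > old_rank:
            events.append({"profile_id": profile_id, "from": old_title, "to": new_title})
    return events


last_week = {"p1": "Sales Manager", "p2": "Director of IT", "p3": "Engineer"}
this_week = {"p1": "Director of Sales", "p2": "Director of IT", "p4": "VP Sales"}
promotions = detect_promotions(last_week, this_week)
```

Each event in `promotions` could then be routed to the account owner as a prompt to send a congratulatory note.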

Automating Lead Discovery with Intelligent LinkedIn Crawling

Finding new leads is time-consuming: it can take up to 20% of a sales team’s week. Intelligent crawling automates the process through specific filters. For example, a crawler can be set up to find every “Head of Sales” at a “Fintech” company based in New York with less than six months’ tenure in the role, so that no salesperson has to sift through pages of search results by hand.
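The filter in that example can be expressed in a few lines. This is a sketch under stated assumptions: the `Lead` record, its field names, and the sample data are hypothetical, and tenure is computed in whole months from an assumed `role_start` date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Lead:
    name: str
    title: str
    industry: str
    city: str
    role_start: date  # assumed field: date the person started the current role


def months_in_role(lead, as_of):
    """Whole months between the role start date and the reference date."""
    months = (as_of.year - lead.role_start.year) * 12 + (as_of.month - lead.role_start.month)
    if as_of.day < lead.role_start.day:
        months -= 1  # the current month is not complete yet
    return months


def matches(lead, as_of):
    """Head of Sales, Fintech, New York, under six months in the role."""
    return (
        lead.title == "Head of Sales"
        and lead.industry == "Fintech"
        and lead.city == "New York"
        and months_in_role(lead, as_of) < 6
    )


leads = [
    Lead("Ana Ruiz", "Head of Sales", "Fintech", "New York", date(2024, 2, 1)),
    Lead("Ben Ito", "Head of Sales", "Fintech", "New York", date(2023, 1, 10)),
    Lead("Cy Lane", "Head of Sales", "Fintech", "Boston", date(2024, 3, 1)),
]
today = date(2024, 6, 15)
shortlist = [lead.name for lead in leads if matches(lead, today)]
```

Passing the reference date in explicitly, rather than calling `date.today()` inside the function, keeps the filter reproducible.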

Furthermore, because a crawler operates continuously, it supplies sales teams with a steady stream of new leads at a volume no single person could match. As a result, salespeople can focus on building customer relationships and making sales instead of searching for lead information in a digital pile of hay.

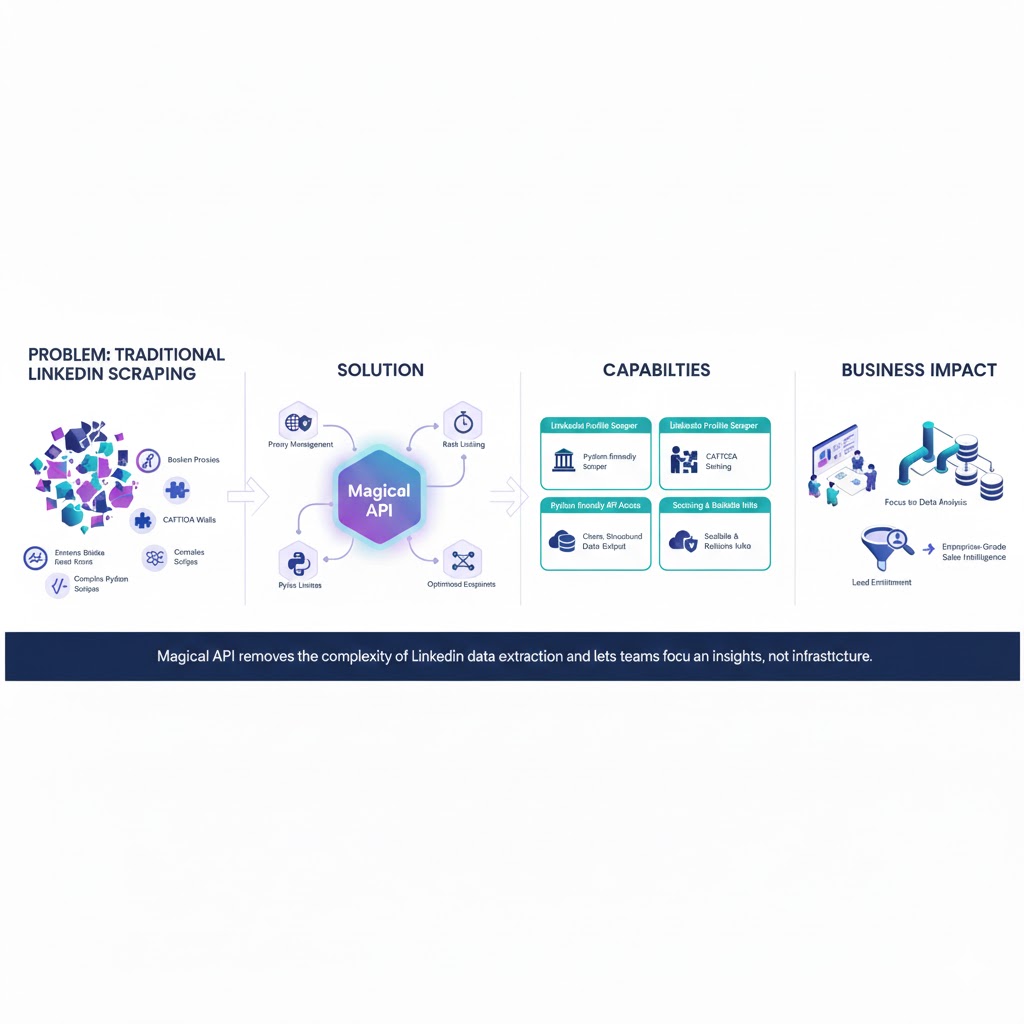

Leveraging Magical API for Seamless Data Access

There are many ways to write your own LinkedIn crawler script in Python; however, most businesses prefer to rely on an external provider that handles the wide variety of issues associated with a LinkedIn scraping API. This is where Magical APIs prove their worth as a partner for sales operations.

Magical APIs offer a complete package of endpoints that have been optimized to eliminate common pain-points associated with traditional web scraping, including proxy management, rate limiting and the complexity associated with captcha solving.

Magical APIs let you use a powerful LinkedIn profile scraper and LinkedIn company scraper without worrying about the underlying technical aspects of extraction. Because your team no longer needs to spend resources managing those technical elements, they can spend their time analyzing the actual data.

Whether you are looking to enrich your current lead list, build a new database from the ground up or anything in between, you can depend on Magical APIs to deliver the reliability and scalability required for enterprise-grade sales intelligence. Magical APIs also provide a simple, easy-to-understand framework for scraping LinkedIn data using Python, giving you clean, structured outputs that are immediately usable within your organization’s internal tools.

Enhancing Account-Based Sales with Real-Time LinkedIn Insights

Account-based sales (ABS) campaigns focus on a narrow audience of specific organisations, whereas traditional sales approaches cast a wide net to capture any company within an industry. A significant part of ABS is understanding the entire decision-making unit (DMU) for each potential customer. A crawler can help identify the primary contact as well as the influencers, gatekeepers and blockers in an organisation of interest.

Real-time insight into target accounts helps ABS teams assess their fit within those organisations. For example, if a target account lists a series of open positions on LinkedIn, a crawler that scrapes LinkedIn jobs can surface them and help you infer the future strategic direction of the business. Knowing that your target is actively seeking people with technical skills in a particular area, you can create a sales pitch that speaks directly to the problems it is trying to solve with its hiring.

How LinkedIn Crawlers Identify Buying Intent and Decision-Makers

Finding the “right person” at the “right time” to sell to is every sales rep’s dream. People show their intent to buy on LinkedIn through various signals, such as the companies they follow. However, these signals do not always mean that the person has the power or authority to buy.

Identifying decision-makers is often about more than what a title says. By looking at the seniority and length of employment of people in the same company, a crawler provides additional information about who actually holds authority. Analyzing the experience and skills sections of employee profiles helps you find the true decision-makers at each company, so that you can concentrate on the right people when trying to close a deal.

Scaling Sales Research Without Scaling Headcount

Automation allows businesses to grow their lead generation quickly. In the past, an organization that needed to double its research output would have had to roughly double its headcount. With a properly configured LinkedIn crawler, organizations can scale up their research capabilities without adding personnel.

The research capabilities of LinkedIn crawlers go beyond collecting names; they support the deeper research needed for “warm” outreach campaigns. Crawlers can collect not only the names of prospects but also additional information about them, including social interactions, volunteerism, society memberships, and online behaviors.

Organizations can then use this information to create “warm” outreach that stands out in a busy prospect’s inbox. The ability to do this at scale is what distinguishes modern data-focused sales organizations from those of the past.

Data Accuracy, Freshness, and Compliance in LinkedIn Crawling

One common mistake companies make in their sales intelligence efforts is relying on data that has become outdated or ‘decayed’. B2B data is commonly estimated to lose nearly 30% of its value every year. Because of this high rate of decay, ‘freshness’ is an extremely important metric.

Crawler technology solves this problem by crawling through key accounts and profiles at regular time intervals to ensure that you only see the most recent, accurate data available. That’s how you make sure that your sales team is always using the most current information while working with your CRM.
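The sketch below illustrates both ideas in simplified form. It assumes the roughly 30% annual decay compounds year over year (an assumption, since the estimate does not say how it accumulates), and it uses a hypothetical mapping of account names to last-crawl dates to decide which accounts are due for a refresh.

```python
from datetime import date, timedelta


def remaining_fraction(years, annual_decay=0.30):
    """Share of a dataset's value left after `years`, assuming compounding decay."""
    return (1 - annual_decay) ** years


def due_for_recrawl(last_crawled, as_of, interval_days):
    """True when at least `interval_days` have passed since the last crawl."""
    return as_of - last_crawled >= timedelta(days=interval_days)


def stale_accounts(accounts, as_of, interval_days=7):
    """Names of accounts whose last crawl is older than the refresh interval."""
    return sorted(
        name
        for name, last_crawled in accounts.items()
        if due_for_recrawl(last_crawled, as_of, interval_days)
    )


# Hypothetical account -> last crawl date mapping.
accounts = {
    "Acme": date(2024, 6, 1),
    "Globex": date(2024, 6, 12),
    "Initech": date(2024, 5, 20),
}
to_refresh = stale_accounts(accounts, as_of=date(2024, 6, 15), interval_days=7)
```

Under the compounding assumption, a list left untouched for two years would retain about half of its original value, which is the argument for a regular refresh interval.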

However, with great data comes great responsibility. Compliance with data protection laws like GDPR and CCPA is paramount. Professional crawlers and APIs are designed to respect privacy settings and public data availability. When organizations follow best practices for scraping LinkedIn with Python, they collect data in a way that is ethical and compliant with platform terms of service, protecting the company from legal and reputational risks.

Integrating LinkedIn-Crawled Data into CRM and Sales Tools

Data needs to be accessible to be useful. The last piece of an effective crawling strategy is getting the extracted information into your Customer Relationship Management (CRM) system, such as Salesforce or HubSpot, seamlessly. You can build a LinkedIn data extraction pipeline in Python that automatically loads or syncs new leads and changed profile information directly to your sales team’s dashboards.

Modern CRM integrations perform data enrichment as part of the sync. When a new lead is created in the CRM, the integration automatically fills in the gaps, including company size, industry, LinkedIn profile URL, and career history, giving the sales representative a comprehensive view of the prospect. Managing data this way lets an organization keep the CRM as a single source of truth.
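A gap-filling merge of this kind might look like the sketch below. The record shapes and field names are hypothetical, and no real CRM API is called; the point is only that crawled values fill empty fields without overwriting what a sales rep has already entered.

```python
def enrich(crm_record, crawled):
    """Fill empty CRM fields from crawled data; never overwrite existing values."""
    merged = dict(crm_record)  # copy so the original record is left untouched
    for field, value in crawled.items():
        if merged.get(field) in (None, ""):
            merged[field] = value
    return merged


# Hypothetical new lead as created in the CRM, with gaps.
new_lead = {"name": "Ana Ruiz", "company": "Acme", "industry": "", "company_size": None}

# Hypothetical crawled data for the same person.
crawled_profile = {
    "company": "Acme Corp",  # differs from the CRM value, so it is NOT applied
    "industry": "Fintech",
    "company_size": "51-200",
    "linkedin_url": "https://www.linkedin.com/in/example",
}

enriched = enrich(new_lead, crawled_profile)
```

Treating the CRM value as authoritative whenever one exists is one possible policy; a team that trusts crawled data more could invert the rule for selected fields.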

Advanced Techniques: Mapping Corporate Hierarchies

For example, CROs and Vice Presidents may have needs for their departments similar to those of their counterparts in other regions. Understanding the chain of command helps the sales team navigate the internal politics of an account.

In summary, a crawler can help sales professionals identify the individuals within an organization they want to reach and understand the company’s hierarchy.

Competitive Intelligence: Monitoring the Move of Talent

Monitoring employee turnover at competing organizations helps reveal the culture of the organization. Employee profile data collected through analyzing former employees (i.e., alumni) of competitors delivers insights into the competition’s employee turnover rates, what talent they are trying to recruit, and where they will likely focus their product strategy moving forward. For example, if a competitor suddenly increases employee count in a new market, it may indicate plans for expansion into that area.

Analyzing your competition’s “alumni” also delivers valuable insights to your organization. When an employee departs from a competitor and moves to a different organization, that employee is an ideal lead for your organization as that employee already knows the value proposition of the industry. You can utilize technology (e.g., crawlers) to identify the specific account where a former employee works at a given time just as the former employee is starting their new position.
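Detecting these moves comes down to comparing two snapshots of who works where. The following sketch assumes hypothetical person-to-employer mappings from two consecutive crawls; the names and the `competitor_alumni` helper are illustrative.

```python
def competitor_alumni(previous, current, competitor):
    """People who were at `competitor` in the earlier snapshot and are now elsewhere."""
    moves = []
    for person, old_company in previous.items():
        new_company = current.get(person)
        if old_company == competitor and new_company and new_company != competitor:
            moves.append((person, new_company))
    return sorted(moves)


# Hypothetical person -> employer snapshots from two consecutive crawls.
last_month = {"Ana Ruiz": "RivalCo", "Ben Ito": "RivalCo", "Cy Lane": "Acme"}
this_month = {"Ana Ruiz": "Globex", "Ben Ito": "RivalCo", "Cy Lane": "Initech"}

new_leads = competitor_alumni(last_month, this_month, "RivalCo")
```

Each pair in `new_leads` names a person and the account they have just joined, which is the moment the text above suggests reaching out.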

Predictive Analysis: Forecasting Sales Trends with Crawled Data

The next evolution in sales intelligence will be predictive rather than reactive. Data scientists can use months of historical data collected by an automated crawler to reveal the patterns that lead up to a sale. For example, if companies in your industry usually buy your software three months after hiring a new VP of Innovation, you can use this insight to prepare for future opportunities.

Instead of waiting until after hearing the news that a new VP has been hired, you can start preparing your outreach strategy as soon as the position is posted. By taking this approach, you will shorten your sales cycle and increase your likelihood of winning a deal because you will often be the first vendor engaged.
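A very simple version of this pattern analysis is to measure the typical gap between a hire and a purchase in past deals and project it forward. The sketch below uses made-up historical dates and the median as the summary statistic; a real model would need far more data and validation.

```python
from datetime import date, timedelta
from statistics import median

# Hypothetical history: (date the VP was hired, date the company purchased).
history = [
    (date(2023, 1, 10), date(2023, 4, 10)),
    (date(2023, 3, 1), date(2023, 6, 5)),
    (date(2023, 5, 15), date(2023, 8, 1)),
]


def typical_lag_days(pairs):
    """Median number of days between the hire and the purchase."""
    return median([(purchase - hire).days for hire, purchase in pairs])


def expected_purchase(hire_date, lag_days):
    """Project a likely purchase date for a newly observed hire."""
    return hire_date + timedelta(days=lag_days)


lag = typical_lag_days(history)
forecast = expected_purchase(date(2024, 2, 1), lag)
```

The median is used here because one unusually slow deal would skew a mean; with this sample the lag works out to about three months, matching the example in the text.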

The Future of Sales Intelligence: AI, Crawlers, and Predictive Insights

The future of sales intelligence lies in the merger of artificial intelligence and web crawling. AI-powered crawlers will not only scrape text from webpages but also understand the context and sentiment behind a user’s online profile. An AI crawler could generate a summary of a potential buyer’s professional philosophy based on the posts and comments made by that individual. This summary would give a salesperson guidance on the tone and content of their conversation with that particular prospect.

As machine learning models become more integrated with data extraction tools, the distinction between “searching data” and “insights from searching data” will fade. We will move toward “autonomous prospecting”: sales databases that identify potential buyers, conduct research, and create hyper-personalized outreach sequences (for the sales rep to approve) based on the information already gathered about a potential buyer.

Automated Sales Intelligence (ASI) is now more important than ever for companies competing against one another. Companies are using LinkedIn’s API and Software Development Kits (SDKs) to collect the data available on LinkedIn and turn it into effective sales tools. By using sophisticated tools like the Magical API and programming languages such as Python, businesses can collect an enormous amount of information on their prospects.

With this new way of doing business, companies will not only be able to find out who their prospective customers are, but they will also be able to collect valuable sales information about their prospects, leading to the ability to convert leads to customers at a faster rate than ever before.

Common Questions About LinkedIn Crawlers

Is using a LinkedIn crawler legal?

Yes, crawling publicly available data is generally legal, but it must be done in compliance with data privacy laws like GDPR and the platform’s terms of service. Using a professional API can help ensure your data collection remains within legal and ethical boundaries.

What is the difference between scraping and crawling?

Scraping typically refers to the extraction of data from a specific page. Crawling is a more complex process where the software “crawls” through a website, following links and navigating multiple pages to gather a broader dataset.

Can I build my own LinkedIn crawler Python script?

Yes, Python is a popular language for this task due to libraries like BeautifulSoup and Selenium. However, maintaining a custom script can be difficult due to LinkedIn’s frequent site updates, which is why many prefer using a dedicated API.

How does a LinkedIn crawler handle private profiles?

Crawlers can only access information that is visible to them. If a profile is set to private or restricted, a standard crawler will not be able to see that data. Most sales-focused crawling is done on public-facing or network-accessible data.

How often should I crawl my target accounts?

This depends on your sales cycle. For fast-moving industries, a weekly update might be necessary. For more stable enterprise markets, a monthly refresh of your CRM data is usually sufficient to maintain accuracy.