In the digital age, the saying “data is the new oil” implies that the internet is the world’s largest and most dynamic oil field. For organizations and researchers, being able to extract and refine this resource (to collect, code, and analyze web data) is not merely a luxury; it is a fundamental driver of innovation and competitive advantage.

Given the amount and velocity of data, any manual collection of that information would be simply infeasible! This is where the top web scraping software for data analysis enters as a core part of the modern analyst’s toolkit, essentially the equipment that processes the web’s disorganized chaos into structured and actionable insights.

Table of Contents

Why Web Scraping Software Matters for Data Analysis

Essentially, web scraping is the automation of extracting data from websites. However, web scraping goes beyond automation. With the right web scraping tools, organizations can establish reliable data pipelines that drive everything from operations on a daily basis, to long-term planning. Without these tools, organizations are effectively operating blindly, missing potentially critical signals from their target markets.

Imagine being able to monitor every price change from your top five competitors in real-time and automatically incorporate that into your pricing strategy.

Alternatively, imagine generating leads pointing to highly qualified individuals by extracting contact information from a public man’s professional networking resources. This is not science fiction; it is practical application of web scraping software that is available today. This technology is vital for:

- Competitive Intelligence: Gathering insights on rival offerings, pricing, promotional efforts, and reviews in an effort to put ourselves on a solid footing with a 360-degree view of the market ecosystem.

- Market Research: Aggregating customer sentiment across the internet (forums and social media), tracking market trends, and pinpointing consumer needs ahead of mainstream demand.

- Lead Generation: Creating hyper-targeted prospect lists. For example, sales teams can utilize tools such as a Linkedin Profile Scraper to identify potential organizations and clients, based on job title, industry, size, etc. It’s incredibly more in-depth than just searching.

- Financial Analysis: Gathering real-time data from financial news sites, stock markets and SEC filings to inform trading algorithms and investment models.

Ultimately, these tools are a cornerstone of modern web scraping tools for business intelligence, allowing companies to make decisions based on comprehensive, timely data rather than intuition alone.

LinkedIn Profile Scraper - Profile Data

Discover everything you need to know about LinkedIn Profile Scraper , including its features, benefits, and the different options available to help you extract valuable professional data efficiently.

Key Features to Look for in Web Scraping Tools

The market for scraping software can be overwhelming, so focus on the elements that matter. One tool that rises to the top is MagicalAPI and its great LinkedIn Profile Scraper. MagicalAPI fulfills all the requirements:

- Easy to Use + Powerful: MagicalAPI is a linkedin scraping api which is simple to use, with clear documentation, for any technical user as well as non-technical users.

- Scalable: Whether you need a few hundred records or millions, since it is cloud based, MagicalAPI allows for large-scale extractions that won’t part your current machine or strain its capabilities.

- Reliable: Forget captchas, proxy, issues. MagicalAPI is built on anti-blocking infrastructure together with programs like Cloudflare Startup and Microsoft for Startups.

- All Inclusive Data: The scraper will collect all the data you need like names, job titles, experience, education, certificates and projects with clean, structured JOSN data to integrate as you like.

- Integration into Existing Workflow: The data that is extracted by a Scraper (either web scraper or a Linkedin Company Scraper) can seamlessly integrate with CRMs, SQL databases, Google Sheets, or BI tools, allowing you to transition easily from asset collection to analysis.

- Real-Time Freshness: Profiles change frequently, so you will want to make sure you are getting the most recent change any time you capture a profile. MagicalAPI will ensure that happens for you.

- Developer-Friendly: There is a credit-based system, SDKs, and a REST API, which makes it easy and flexible to get up and running with.

All in all, if you want a scalable, reliable, and easy to integrate with workflow web scraping tools interface, the LinkedIn Profile Scraper from MagicalAPI has the strongest offering on the market today.

LinkedIn Company Scraper - Company Data

Discover everything you need to know about LinkedIn Company Scraper , including its features, benefits, and the various options available to streamline data extraction for your business needs.

Understanding Different Types of Web Scraping Solutions

The range of tools for scraping data from the web covers varying needs from individual researchers to large-scale enterprises. In general, three types of data scraping tools can be identified.

No-Code/Low-Code Platforms: The entry point for anyone new to data extraction.

These tools have paved the way for anyone to now be able to scrape data – regardless of coding skill level. No-code and low-code platforms are the best option for marketers, small business owners, and analysts who are running quick proofs-of-concept.

- Octoparse: Widely acknowledged for its intuitive visual workflow, Octoparse allows users to build scrapers at a basic level by clicking on the data elements they need. It’s AI-driven data detection functionality and cloud-based reliance, make this equally powerful and easy to use for users who do not have technology backgrounds.

- ParseHub: ParseHub brings to the table similar abilities to Octoparse in terms of ease of use – even for websites with interactivity that mostly rely on JavaScript. ParseHub possesses functional capabilities in their visual interface that sense features like infinite scroll, dropdowns, and logins – showcasing versatility this kind of tool presents in the contemporary marketplace for web-based applications.

- Import.io: Import.io qualifies as an enterprise solution that allows the user to leverage web data as a service from their existing site(s). Import.io combines no-code extraction with preparation of data and integration functionality that is perfect for businesses that require a consistent high-quality stream of data.

Programmatic Frameworks & Libraries: For Maximum Control and Customization

Developers and data scientists may perceive existing products as inflexible. Tools that allow programming let you fully control the scraping logic. These tools are easily customizable for more sophisticated and complex problem solving.

- Scrapy: Scrapy is a free, open-source framework in Python, and it is the standard for web crawling and scraping projects. It uses an asynchronous architecture, making it incredibly fast and efficient for web scraping at scale. Scrapy has a rich ecosystem of middleware and extensions for addressing practically all computing scraping scenarios.

- Beautiful Soup: It is a Python library that gives you the ability to parse HTML and XML documents. Beautiful Soup is terrific for navigating through the structure of a document and pulling out data. Beautiful Soup does not fetch web pages, but is almost always used to parse the documents it receives back from the web server using the Requests library.

- Puppeteer: Puppeteer is a Node.js library developed by Google. Puppeteer is a high-level API that allows you to control headless Chrome or Chromium browsers. Puppeteer is an excellent choice for scraping a dynamic Javascript-heavy single-page application (SPA) because it replicates user actions and simulated navigation inside the browser.

Specialized Platforms & Services: Managed Solutions for Enterprise-Scale Needs

When data collection is a primary function of your business, dealing with the complexities of the underlying infrastructure can be a hefty burden. Many companies are simplifying this by using some form of Web Scraping as a Service (WSaaS) that reliably handles the headaches surrounding proxies, browser scaling, and anti-bot systems, allowing you to focus on the data alone.

- Apify: Apify is a complete platform for writing, running, and sharing web scrapers (called “Actors”). It has a large library of pre-built scrapers for many of the common sites people want to scrape, and it has the infrastructure for running your own custom code in the cloud. This makes it a great resource for both developers and data analysts.

- Bright Data: As the market leader in web data infrastructure, Bright Data offers a comprehensive toolkit including a large proxy network, visual scraper builder, and out of the box datasets. This is a space for serious projects where reliability, scale, and anonymity are critical.

Common Challenges in Web Scraping (and How Tools Solve Them)

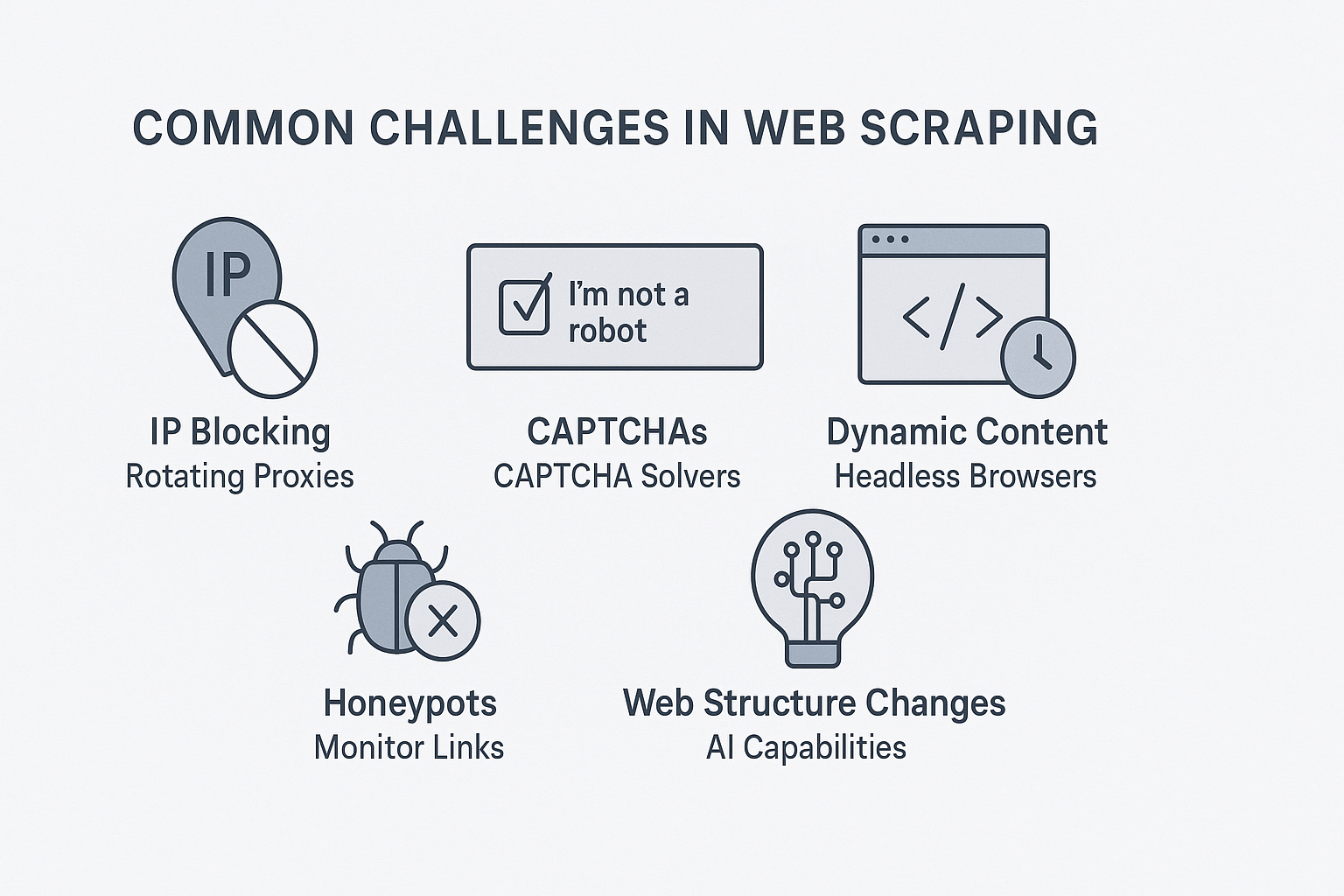

The web can be an unwelcoming place for scrapers. Websites actively implement countermeasures to stop automated traffic. Here are some of the common coping strategies:

- IP Blocking: This is the most common countermeasure. Scraping tools circumvent IP blocking with large pools of rotating proxies that make web requests appear to come from thousands of different users.

- CAPTCHAs: CAPTCHAs (tests designed to tell the user if they are a robot) are designed to stop automated requests. Advanced web scraping services partner with third-party CAPTCHA solvers to resolve this barrier.

- Dynamic Content: Javascript-loaded data will not be present in the initial HTML. Tools with headless browsers (e.g., Puppeteer or Selenium) can fully render the page like a human user in order to access this content.

- Honeypots: Websites occasionally will have invisible links that are solely intended to trap scrapers. The best data extraction tools for analytics

will monitor links for legitimacy and will avoid these pits. - Web Structure Changes: A minor change to the HTML of a website can break a scraper. Many modern web scraping tools are also adding AI capabilities to observe minor layout changes, which may lead to much less maintenance.

Build vs. Buy: The Strategic Choice Between In-House Scraping and Data Providers

As your organization becomes more reliant on web data, you’re faced with a strategic question: do you want to build your own web scraping capability in-house, or do you want to purchase off-the-shelf datasets from a third-party data provider? There isn’t a correct answer to this question. The correct choice depends on your situation, including data uniqueness, scale, budget and internal capacity.

The Case for Building Your Own Scraping Solution (In-House)

Establishing a proprietary web scraping infrastructure provides the ideal level of control and customization. This option involves utilizing programmatic frameworks such as Scrapy or Puppeteer, operated by your company’s developers or data engineers.

- Unmatched Customization: The primary benefit of building is the flexibility to target any public website and extract the precise data points you need, regardless of how niche the data is. If your competitive advantage is based on proprietary data that no one else has, building is likely the only way to obtain your data.

- Complete Control and Customization: You have complete control of the data pipeline. You can quickly update your scrapers when the target website changes, you can change from daily scraping to every five mins, and you can produce the output in any format you need for your internal systems.

- Potential Cost Savings in the Long Term: The cost of managing your development time and operational time can end up being higher upfront, but when the scraping use case is at scale and continuous, the long-term operational costs will be less than a comparable monthly subscription fee to a data provider.

This method is most appropriate for: Companies that have a robust internal technical team, companies whose fundamental business model relies on proprietary web data, and projects that require near real-time information from highly specific sources.

The Case for Buying Data from a Provider (Data as a Service)

Many companies encounter the technical and maintenance issues of web scraping as a significant hurdle. DaaS (Data as a service) vendors assume total control of the scraping and deliver data to you in a clean, structured format.

- Speed and Immediate Value: The quickest way for you to get the data. You avoid the entire cycle of development, testing, and maintenance, and go right to analyzing the data. This can be invaluable for projects needing results quickly.

- Management of Technical Burden and Maintenance: You will not have to wrangle complex proxy networks, solve CAPTCHAs, and deal with the day to day headache of scrapers breaking every time someone changes the site layout. The vendor takes on all of this operational complexity.

- Stable and Transparent Costs: A subscription product simplifies your budget. You know how much you will spend every month or every year on a specific dataset.

- Compliance and Legal Offloading: Authorized data suppliers understand the legal implications around data gathering, and typically by purchasing data, you offload the majority of the compliance and ethical implications of web scraping.

This method is ideal for: Organizations requiring standard industry datasets (e.g., product data from large e-commerce platforms, public company data) and teams with no data engineering support who need trustworthy data quickly.

The Anatomy of a Web Scraping Project: From Goal to Dataset

Executing an effective web scraping project does not merely mean running a tool, but, rather, is a thoughtful and intentional process.

- Identify Your Objective: Be very clear about what data you need (i.e. name of products, costs, reviews).

- Review Your Target Site: You’ll want to understand how the site is built and some structural information. Robots.txt can be viewed by simply typing www.example.com/robots.txt in your web browser.

- Select Your Tool: Based on your goal and the level of complexity of site chosen, go through the list again and select. A simple static blog only needs Beautiful Soup, but an e-commerce site with a lot of data may need Puppeteer, or a no-code tool like ParseHub.

- Create the Scraper: You will need to create the code or design the visual tool to move through pages, find the desired HTML elements, and get the data.

- Run and Supervise: You will run the scraper and keep track of what is happening. Be ready to deal with problems if the layout of the site changes or there are temporary Internet problems.

- Clean and Structure the Data: The formatted data is usually messy, so this is the last and most significant step – cleaning the data (e.g., removing items from prices), structuring it, and placing it in a type that is ready for analysis (like a CSV file or a database).

How Web Scraping Enhances Data Accuracy and Speed

Where web scraping is most effective is in its dual value of speed and accuracy. A market analyst could be assessing the price of 1,000 products from competitor websites; this would take a week of very painful work and likely have copy errors.

A scraper that has been built well, on the other hand, could gather 100,000 prices in less than an hour with full accuracy, and schedule that scraper to refresh the data each day. The speed allows a business to react to market shifts in near real-time and the accuracy ensures that when the business conducts its followup analysis of the data, it is using reliable data.

Ethical Web Scraping: Best Practices for Responsible Data Collection

The phrase “With great power comes great responsibility,” is relevant when scraping content, because being an ethical scraper is critical for both sustainability and being a good neighbor of the web.

- Always Look at the robots.txt File: This file is a file located in a website’s root directory that requests certain parts of the site not to be scrapped by bots. Respecting the rules in this file is the first step in being an ethical scraper.

- Scrape in a Reasonable Manner: Don’t slam a server with hundreds of requests per second. Always add a delay in your request so you do not hurt the website’s performance for human users.

- Identify Your Bot: Add your bot as a user-agent in the header of your scraper. An example is “MyCompany-Market-Research-Bot” so an administrator knows who you are.

- Only Scrape Public Data: Never scrape any data from a website that is behind a login or any PII information unless you have clearly consented from the party.

Integrating Scraped Data into Your Analytics Workflow

Collecting data is only half of the journey. The value of the data is realized when it is all connected into your analytics pipeline. After scrubbing and formatting the scraped data with a library like Python’s Pandas, you can load it into a platform of your choice, like Google BigQuery or Amazon Redshift.

Next, you can connect the data to business intelligence tools, like Tableau, Power BI, or Looker Studio to come up with interactive dashboards and reports that visualize trends and insights that wouldn’t be otherwise available to you.

An example of this type of use case is providing a Linkedin Company Scraper a place to push data to feed a BI dashboard with the competitive growth of headcount over time. Individuals interested in building these types of systems commonly search for how to scrape linkedin data using python and may use the pre-built linkedin scraping API to get more stable and structured access to the data.

Being able to access this type of data directly is how companies cultivate a true data-driven culture. A common use case is to understand labor markets. For example, software developers, looking for a side project will learn how to scrape linkedin jobs to understand the trending skills in a particular labor market.

Conclusion for Top Web Scraping Software for Data Analysis

In an information-heavy environment, the ability to quickly extract and analyze web data is a superpower. From no-code plug-and-play platforms to powerful programmatic tools, the top web scraping software for data analysis offers a way to leverage this information.

When you choose a tool based on where you fall on the technical knowledge spectrum and what you want to work on, you can turn the public web into your own private, organized database. This allows you to move beyond a guessing exercise to building strategies based on a thorough view of real-time data. This can keep you from just keeping up with your competitors and lead to staying multiple steps ahead.

FAQs about Web Scraping Tools

1. Is it legal to take data off the web?

The legal status of web scraping is murky to complicated and may depend on the specific website’s terms of service, the type of data being scraped (public vs. private) and even where you are located. In general however, scraping data from publicly available sources for the purpose of analysis is typically legal. Avoid scraping person data, pay attention to a website’s robots.txt file, and consult with a lawyer before acted if you are unsure.

2. Can web scraping tools scrape dynamic sites?

In general the answer would be yes. Many current web scraping tools, especially those that use a handless browser feature aren’t able to scrape dynamically generated sites with plenty of JavaScript. Draft can work in real time through, or present the work groups, community groups, user groups and repeated users whom is working in this way. The tools are designed only to scrape and don’t necessarily or can’t represent the community working in action.

3. What are some strategies to avoid being blocked while scraping?

There are many methods to lessen the chances of being blocked, and you should look for the right combination below: (1) a pool of premium proxies (residential proxies tend to work better in certain cases); (2) randomize time delay between requests; (3) rotate the user-agent strings to simulate multiple browsers; and (4) also manage sessions and cookies.

4. What is the difference between web scraping and APIs?

An API (Application Programming Interface) is an official, structured endpoint that a website owner provides for programmatic data extraction. You can think of it as the “front door.” Web scraping is the process of retrieving data through the HTML of a web page in contexts when there is no API available. This could be thought of as walking around and reading the sign on the door of the building because there is no front desk to ask. If there is a public API, it will nearly always be a better option due to stability and more reliable access.

5. What is the learning curve needed to learn to use web scraping software?

The learning curve varies widely. For example, if you want to use a no-code visual tool, such as Octoparse, you could expect to be setting up your first scraper within the same day. Alternatively, if you wanted to use a programmatic framework like Scrapy, where you will need to learn a programming language (in this case Python), you would need to expect a much steeper period of learning before being able to successfully develop web scrapers, but the power and flexibility will be far greater in the long term.