Artificial intelligence is no longer just a figment of science fiction. It has emerged as a useful technology transforming industries like healthcare, finance, and more. But what is the secret sauce that fuels these game-changing models? AI training data. Just like a student can’t learn without quality books, an AI model can’t become intelligent without a huge, accurate, and related dataset. The process of teaching the machine to recognize patterns, apply predictions, and complete functional tasks relies on curated information to pull from.

Obtaining and managing that information is one of the largest road bumps in AI development. It is an arduous journey with many challenges that could sink a project. In this article, we will identify the most common challenges of AI training data facing teams and outline actionable strategies and best practices to tackle them.

Table of Contents

Why Training Data Matters for Successful AI Models

AI training data refers to the specific raw data used to train a machine learning model, whether that is text, images, videos, or numbers. The idea is to provide the model with a sufficient number of examples for it to passively learn to recognize patterns and correctly infer the correct choices when it sufficiently sees new data that it hasn’t previously been shown. The adage “garbage in, garbage out” has never been more topical. The quality, relevance, and variety of your training data ultimately dictate the model’s performance, accuracy and fairness in the real world.

In most AI use cases, this data will need to be labeled or annotated, which refers to attaching a specific output to each input. For example, an input image of a cat will be labeled with the tag of “cat.” The model is exposed to millions of these instances and will update it’s internal parameters through an iterative process until it can eventually recognize a cat on its own. A trained dataset that has been pre-processed, typically separated into three sets, training, validation and testing datasets, serves as the foundation for all intelligent AI systems.

Read More : AI Data Integration

Where to Find High-Quality AI Training Data

Before you develop solutions to the problems, it is important to have an understanding of where the data will come from. Finding the right data set is an important first step towards building a strong model.

- Open-Source Sites: There are many machine learning data sets, that exist publicly on sites like Kaggle, Google Dataset Search, and the UCI Machine Learning datasets. These are great sites to start conducting your research and experimentation and many developers begin using free ai training data on these sites.

- Data Vendors: There are many companies that specialize in collecting, cleaning, and labeling data sets for different facets of industry. Although this option is costly, it may save valuable time and typically comes packaged and at high quality.

- Synthetic Data: If there is insufficient real-world data, sensitive data, or the data is imbalanced, you can generate synthetic data. Synthetic data is artificially generated data that mimics the statistical properties of real data. It can be a practical alternative to real data and it can be used to build models without privacy implications.

- Web Scraping: If you are creating your own specific set of data, one viable way is to scrape information that exists publicly on the web. For example, collecting professional information relevant to the industry will require some form of scraping. If you are seeking to create analysis models of a specific market, “How to scrape data from LinkedIn Free” would be a helpful tutorial.

Challenge 1: Data Quality and Accuracy Issues

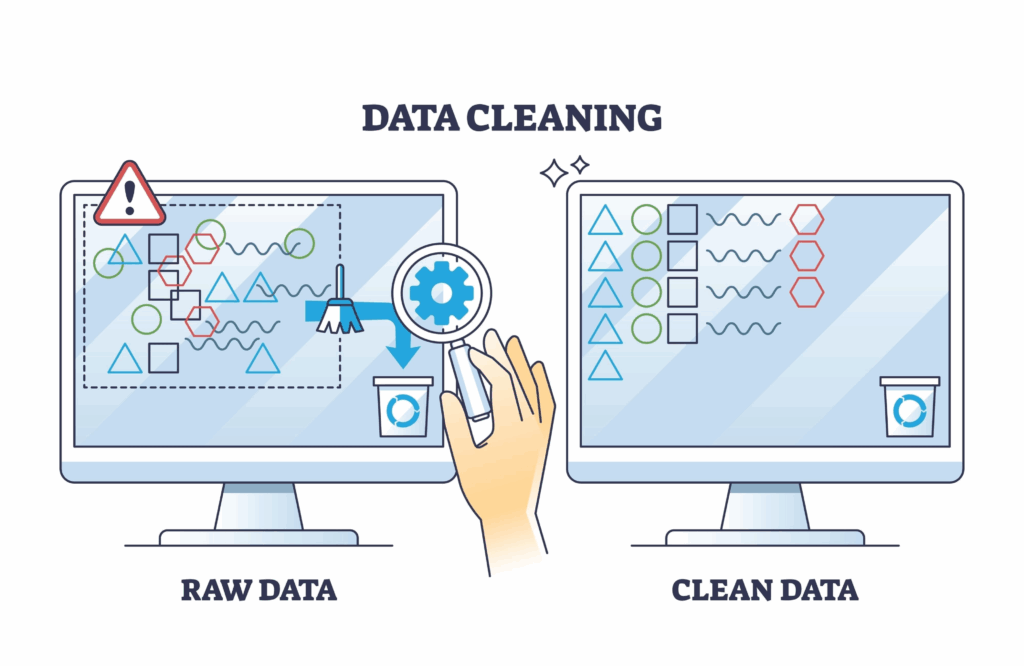

Poor data quality is one of the most common challenges with developing AI, and it can take many forms. Poor data conditions may involve noisy data (i.e., irrelevant data), lack of value, duplicates, or worst detected issue, incorrect labeling. If the model learns from poor data, the resulting predictions will be poor as well. If the AI’s end goal was to predict disease from medical scans, a single mislabeled scan from the training set can lead to compounding issues.

How to overcome: To solve this problem you will have to implement a robust data cleaning and validation process. The first step will be to have automated scripts in place to check for duplicates and blank/ NaN values, and then treat them where possible.

When dealing with labels, a human-in-the-loop model should be seen as the gold standard. That is, same data should be labeled by multiple annotators and then filtered through a consensus curation process. Regularly auditing the data, and using version control to keep a consistent and clean data set over time will also improve data quality.

LinkedIn Profile Scraper - Profile Data

Discover everything you need to know about LinkedIn Profile Scraper , including its features, benefits, and the different options available to help you extract valuable professional data efficiently.

Challenge 2: Limited or Imbalanced Datasets

The mantra in machine learning is that the more data, the better. After all, many of the models making waves have been trained on billions of data points. But what happens when you don’t have enough data, or the data you do have is very imbalanced, which is a state of having limited or imbalanced data?

A classic ai training data example is from fraud detection, where fraudulent cases are extremely rare when compared to normal cases; for example 99% non-fraud and 1% fraud. If a model is trained on this imbalanced dataset, it may get very good at predicting “no fraud” or “not fraud” and lose significantly at actually detecting fraud – which is the primary reason for building the.ai based system.

How to overcome It: There are a variety of ways to address it. Data augmentation relies on making minor changes to the existing data points to create new data points. For example, rotating an image or paraphrasing a sentence. Transfer learning often starts with a pre-trained model and you can fine tune the model to your own smaller, specific dataset.

And for imbalanced data, you can address the issue in various ways by oversampling the minority class (i.e. making copies of the rare examples) or downsizing the majority class. The end goal is to create a more balanced dataset to help improve model and classification performance.

The Role of Automation and Scraping in Data Collection

In order to build large AI systems manually collecting data is often impractical, takes too long, and can become cost prohibitive. This is the perfect opportunity for automation, or more accurately, web scraping! If the data is published on a website and the site allows scraping, you can use an automated web-scraping tool or bot to systematically collect a massive & diverse amount of freely available public data on the internet and create unique & comprehensive datasets for your particular AI project on your own terms.

As an example, a company looking to build a B2B marketing AI could build a Linkedin Profile Scraper to collect data on people who work in specific jobs in specified industries, or they could leverage a Linkedin Company Scraper to collect data on potential business partners they can do business with. Compared to collecting the data manually, automated scraping solutions can collect the required data at an unimaginable scale.

Further, if you leverage a good linkedin scraping api or scraping framework that has been built for Linkedin, they can organize the data at the time of scraping so that you are collecting data in a structured format that is ready for final processing and eventual ai training data. This approach saves time and cost in the data collection activity, and it also allows for the eventual build of a highly specialized and robust AI model.

Challenge 3: Data Privacy and Compliance Concerns

With increased awareness regarding data privacy, managing personal data represents a new reality for practitioners. The emergence of new regulations worldwide, including GDPR in Europe and CCPA in California, has come with strict requirements regarding how you collect, store and use personal data. Failing to comply with these laws can lead to substantial fines along with potential serious harm to your company. When using AI training data, you must remain vigilant in protecting user privacy and complying with legal frameworks.

How to address it: the first matter of business is to take a “privacy by design” approach. Use anonymized or pseudonymized data wherever you can. This means for instance, removing Personally Identifiable Information (PII) or replacing it with non-identifying tokens. In any case, you will have to obtain proper legal bases to process data, such user consent.

For particularly sensitive projects, you may wish to consider using federated learning, a type of learning where the model is trained on data locally on the users’ device, and where the raw data never leaves the device itself.

Challenge 4: High Costs of Collecting and Labeling Data

As you can see, preparing high quality datasets is an expensive enterprise. Costs can break down into a number of categories:

- Acquisition: sourcing or purchasing raw data

- Storage: hosting terabytes or even petabytes

- Annotation: paying human labourers for their time (usually where much of the cost is incurred); and the associated computational requirements necessary to extract features and train models.

Regardless of the category, the cost may become a hurdle, particularly at the startup level or among smaller entities.

How do we overcome the cost?: To control for cost, a multi-faceted approach can be employed. Use a combination of open-source and proprietary datasets. For labeling, you can use crowdsource mechanisms such as Amazon Mechanical Turk (but with realism regarding quality control).

But, you can use active learning strategies (where the model decides what instances are most ambiguous or perhaps most informative) to identify where to prioritize human effort for the labels effectively, thereby reducing the amount of overall effort. Finally, simultaneous advances in unsupervised and semi-supervised learning (which require less labeled data) will make it possible to overcome costs even further.

LinkedIn Company Scraper - Company Data

Discover everything you need to know about LinkedIn Company Scraper , including its features, benefits, and the various options available to streamline data extraction for your business needs.

Challenge 5: Scalability and Data Management Problems

As your AI project expands, so too the data. With large datasets come greater logistical and technical challenges. Without a good system in place you run the risk of dealing with data versioning (tracking what changes are made to your dataset), storage constraints, and inefficient processing pipelines. It is an unfortunate fact that it is critical to understand which dataset version was used to train which model version (to ensure reproducibility and debugging) – and it becomes incredibly difficult at scale.

How to Address It: Developing a good data management plan is essential. Use a cloud-based storage solution such as Amazon S3 or Google Cloud Storage as they are designed for scale and durability. Use a dataset versioning management system to track every change to your datasets in a manner similar to that of Git users to track changes in code.

Data version control tools such as DVC (Data Version Control) are examples. Lastly, build an automated data pipeline by which data goes through the entire workflow from data ingestion, cleaning, and preprocessing to being input into the model, ensuring consistency and efficiency.

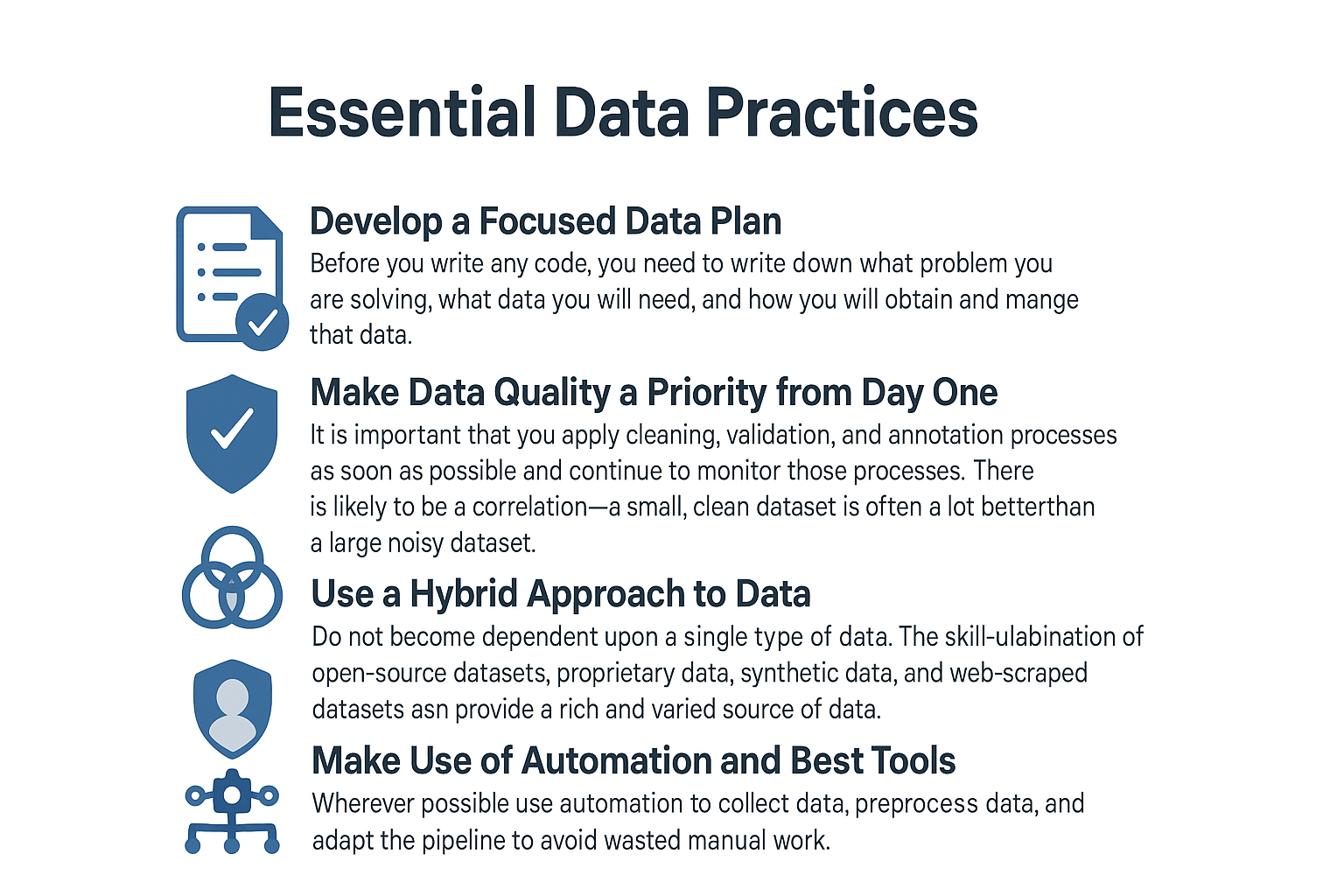

Best Practices to Overcome AI Training Data Challenges

Training data is not about the magic recipe, it’s about a well-researched and thoughtful plan. A proactive way to approach this can turn your biggest bottleneck, data, into your biggest asset. Rather than waiting for problems, you can take action before having any issues, and develop a framework that ensures quality, compliance, and efficiency from the beginning. Here are some best practices for you to follow in planning your data.

First, develop a plan for your data prior to you doing any work. The most successful ai training data projects started not writing code, but a data blueprint. This is a foundational document that describes the problem that you are trying to solve with your model, what types of data (and what features of that data) you need, and the way that you will realistically source the data.

Will you leverage open-source datasets, purchase proprietary datasets, create synthetic datasets, or scrape data off of another public site? The plan should map out your data lifecycle; your process from collection, to storage to cleaning, to versioning and finally archiving your datasets, so that you are intentional in your decisions.

Then, respect the quality of data from day one. Many teams have a bad habit of thinking that “more data is better”, but a small, perfectly clean dataset is nearly always better than a large dataset with lots of noise. Data quality must be a part of your workflow that you don’t compromise on. This can include: automated validation checks as the data is ingested to close the door on errors early, making simple, clear, and repeatable guidelines for your annotators to use, and creating a continuous loop of feedback.

When your model fails, you should then be able to trace that failure back to its source, whether that is an incorrectly labeled example or a gap in what is represented in your dataset. Treat your dataset as a product, somewhere in between a process or output, that you are constantly evaluating and refining.

To create a truly strong model, utilize a hybrid data strategy. Sourcing data from one source is dangerous; it could introduce unseen biases and constrain your model’s ability to generalize to real-world issues. Instead, adopt the thinking of a portfolio manager, and treat your data as a portfolio of mixed assets.

You can create a different dataset by mixing in your own proprietary customer data, publicly available machine learning datasets, web data that you scraped to suit your needs, and targeted synthetic data for your edge cases. Weave together a rich, big, and good-quality training set. Using data in this way will lessen the exposure and the weaknesses of any one source of data and will build a stronger and more flexible AI model.

In addition, integrate privacy and ethics thoroughly into your workflow. Within today’s regulatory environment, data privacy can’t be an afterthought; it needs to be integrated into the architecture of your project. This includes being conscientious of, but not solely following, regulations like GDPR. You’ll develop a “privacy by design” way of thinking, which ensures that you’re also active in implementing that turns personal information into non-personal information via techniques like anonymization and pseudonymization.

You should routinely conduct privacy impact assessments and your data governance policies should be transparent and explicit. Building categories of “ethical AI”, starts with building trust with your end users, which starts with the respectful and appropriate use of their data.

Finally, automate and use the best tools, wherever you can. The volume of data in modern datasets is often such that it excludes the possibility of humans being able to manage datasets accurately and without error manually; many will admit defeat. You will need to embrace automation so that you can develop effective and repeatable data pipelines for data related workflows (including data collection, data preprocessing, and data exploration feature engineering).

Implemented the right tools, like Data Version Control (DVC), which enables you to manage your datasets as rigorously as you manage your code to track even minor updates in datasets. Automating at this level and investing the appropriate time into developing the appropriate MLOps (Machine Learning Operations) systems should eliminate unnecessary labour and avoidable manual tasks you carry out; this and automatically chosen software prevent yourself from having wasted time and energy in your project cycles before they speed up beyond the original scale and in more predictable terms.

Future Outlook: Smarter Approaches to AI Training Data

Artificial intelligence is a continually changing field as are data management practices. However, the trend is toward greater efficiency and intelligence. I have seen federated learning increase in popularity due to not taking ownership of data unlike other services with ai training data capabilities and maintaining privacy.

These new examples of federated learning have shown improvement as zero and few-shot learning advancements allow models to perform tasks based on much less training data than previously thought possible. Improvements in synthetic data generation capabilities will allow for incredibly realistic and diverse data and portfolios that can train models in use cases that have no possibility of obtaining real-world data.

These advancements provide a whole new area of possibilities to create even lower barriers to entry to artificial intelligence development as well as automating certain tasks for development and less risk.

Conclusion: AI Araining Data

Regardless of the challenges (quality data, data balance, privacy laws, cost) the success of any model is tied to the quality of training data. While the path can be rocky for machine learning developers, the obstacles are not unbridgeable.

With respect to quality, and what it means to have thoughtful solutions in place for oodles of data challenges, you’ll be able to build the kind of baseline datasets that will set the stage for the next generation of artificial intelligence. Spending time, money, and vision on data, is spending time, and money, and vision on your artificial intelligence success.

Frequently Asked Questions about AI Training Data

1. What is an example of AI training data?

A good example is a spam filter dataset. Typically this will be several thousand, emails that will all be labelled spam or not spam. Once trained the AI will learn the patterns, words and characteristics associated with the spam mails to automatically classify incoming emails of unknown classification.

2. How do I know how much data I need to assign to the AI model?

There is no single answer to that. It is highly dependent on how complex the underlying task is. A basic classification model that is easy could be very successful with as few as a few thousand examples, whereas a very complex model like a large language model (GPT) could require trillions of words. The important thing here is to ensure that you have sufficient diversity in the data to adequately represent the problem space.

3. Can I use FREE AI training data?

Yes, certainly! There are lots of public repos and platforms that allow you to search and find FREE high quality datasets in all sorts of tasks. And, there are popular sites like Kaggle, Google Dataset Search and even several university archives that are fantastic tools for a lot of research and, students and developers to try things with.