In an era of digital transformation, data is more than an asset; it is the foundation of competitive advantage. The contemporary business environment is fragmented, with important information trapped in dozens (sometimes hundreds) of systems and applications, such as SaaS apps, cloud databases, on-premise ERPs, and marketing platforms. To unlock the value of this disparate data, organizations need a single, holistic view of it. That’s where data integration tools come in: they are the bridge that connects and consolidates information flows across the enterprise.

The first question for many organizations is: what are data integration tools? In the simplest terms, they are software utilities that pull data from different sources and bring it together in a coherent, usable format in a target destination (such as a data warehouse, data lake, or operational environment).

These utilities handle the often complex work of extraction, transformation, and loading (ETL/ELT), and they ensure the data is clean, consistent, and available on a timely basis for reporting, advanced analytics, and machine learning. Without an appropriate integration strategy, businesses risk relying on data that is outdated, inaccurate, or incomplete, each of which leads to flawed decision-making and operational delays.

This guide provides an overview of the integration technology landscape so that you can weigh the alternatives and choose the best data integration tools for your business’s needs, whether you are an early-stage startup or a larger organization.

The Ultimate Guide to the Best Data Integration Tools

Data integration has advanced enormously over the last several decades, moving from manual programming and one-off scripts to sophisticated automated systems. The market now offers solutions for every requirement, from batch processing to real-time streaming.

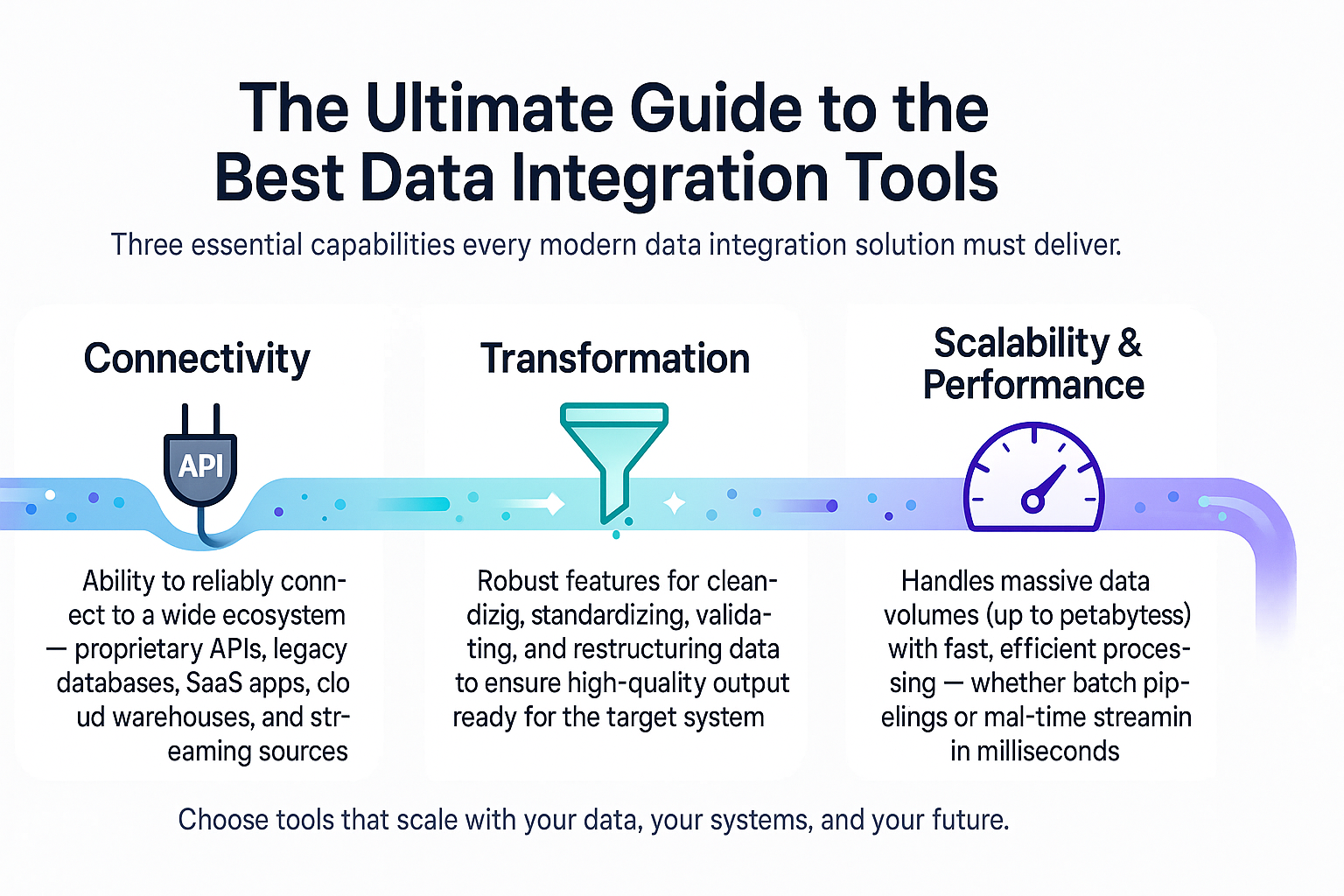

Any quality data integration solution must provide three primary capabilities:

- Connectivity: The ability to conveniently and dependably connect to a broad ecosystem of sources and destinations, including proprietary APIs, legacy databases, and newer cloud services.

- Transformation: A strong suite of capabilities for cleaning, standardizing, and restructuring data to meet the needs of the target system, so that there is a high degree of data quality.

- Scalability & Performance: The ability to move large volumes of data reliably (data integration tools often work with petabytes of data), whether on a scheduled batch basis or with latency measured in milliseconds.

The right platform will depend largely on your architectural preference: traditional ETL, modern ELT, or data virtualization. Understanding these three models will help you form a sound data architecture strategy.

The Core Architecture: ETL vs. ELT vs. Data Virtualization

The methodology a data integration platform employs determines its suitability for a business’s infrastructure and latency requirements.

1. Extract, Transform, Load (ETL)

ETL is the classic approach and has been in use for decades. Data is extracted from the source, transformed on a separate staging server to enforce structure and quality, and then loaded into the target (typically a data warehouse). This approach is preferred when the target system (e.g. a legacy data warehouse) cannot handle transformations in a timely manner, or when strict data quality rules must be enforced before data reaches its destination. ETL tools often ship with data quality features and a more sophisticated transformation engine than other options.
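The three ETL stages can be sketched in a few lines. This is a minimal illustration, not how any particular vendor implements it: the source rows, the `payments` table, and the quality rule (reject rows whose amount is not numeric) are all assumptions made for the example, and an in-memory SQLite database stands in for the warehouse.

```python
import sqlite3

# Hypothetical source rows, standing in for an extract from an operational system.
SOURCE_ROWS = [
    {"id": "1", "email": " Ada@Example.com ", "amount": "19.90"},
    {"id": "2", "email": "bob@example.com", "amount": "not-a-number"},
    {"id": "3", "email": "CAROL@EXAMPLE.COM", "amount": "5"},
]


def extract():
    """Extract: read rows from the source (here, an in-memory list)."""
    return list(SOURCE_ROWS)


def transform(rows):
    """Transform: enforce quality rules before anything reaches the target."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # reject rows that fail the (assumed) quality rule
        clean.append((int(row["id"]), row["email"].strip().lower(), amount))
    return clean


def load(conn, rows):
    """Load: write only the already-clean rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE payments (id INTEGER PRIMARY KEY, email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(warehouse, transform(extract()))
loaded = warehouse.execute(
    "SELECT id, email, amount FROM payments ORDER BY id"
).fetchall()
print(loaded)
```

The point to notice is that the invalid row never reaches the target: the quality gate sits between extraction and loading.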

2. Extract, Load, Transform (ELT)

ELT is the contemporary approach, made practical by the growth of cloud data warehouses such as Snowflake, Google BigQuery, and Amazon Redshift. Raw data is extracted and immediately loaded into the cloud warehouse, where it is then transformed using the warehouse’s compute power through SQL or custom code. ELT is faster for data ingestion, handles semi-structured and unstructured data better, and gives analysts access to raw data for exploratory analysis. Most modern cloud data integration tools are based on the ELT approach.
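For contrast, here is a minimal ELT sketch under the same kind of assumptions: the table names `raw_payments` and `payments` are hypothetical, and in-memory SQLite stands in for a cloud warehouse. The raw rows are landed untouched, and the cleanup is expressed as SQL that runs inside the target.

```python
import sqlite3

# Hypothetical raw rows, loaded exactly as they arrive from the source.
RAW_ROWS = [
    {"id": "1", "email": " Ada@Example.com ", "amount": "19.90"},
    {"id": "2", "email": "bob@example.com", "amount": "7.10"},
]

warehouse = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Load: land the raw data first, with no cleanup at all.
warehouse.execute("CREATE TABLE raw_payments (id TEXT, email TEXT, amount TEXT)")
warehouse.executemany(
    "INSERT INTO raw_payments VALUES (:id, :email, :amount)", RAW_ROWS
)

# Transform: run inside the warehouse, using its own SQL engine.
warehouse.execute(
    """
    CREATE TABLE payments AS
    SELECT CAST(id AS INTEGER)  AS id,
           LOWER(TRIM(email))   AS email,
           CAST(amount AS REAL) AS amount
    FROM raw_payments
    """
)

raw_count = warehouse.execute("SELECT COUNT(*) FROM raw_payments").fetchone()[0]
modeled = warehouse.execute(
    "SELECT id, email, amount FROM payments ORDER BY id"
).fetchall()
print(raw_count, modeled)
```

Because the raw table is kept, analysts can still query the original values or rebuild `payments` with different rules later.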

3. Data Virtualization

Data Virtualization, also referred to as Data Federation, is the non-movement option. In this approach, no physical copy of the data is made; instead, a virtual layer serves as a single access point to distributed data sources. When a user queries the layer, data is pulled from the sources and joined in real time, and at no point is it moved into a central repository for transformation.

Data virtualization platforms work best in business environments that require real-time insights across live, distributed operational systems. They do not offer the same depth of transformation as ETL or ELT, which makes them a weaker fit for historical data analytics.
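A rough way to picture the virtual layer is a function that queries each live source at request time and joins the results in memory. The two SQLite databases below are hypothetical stand-ins for a CRM and a billing system, and `customer_view` is an illustrative name, not part of any product.

```python
import sqlite3

# Two independent "operational systems" (hypothetical stand-ins).
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
crm.execute("INSERT INTO customers VALUES (1, 'Ada')")

billing = sqlite3.connect(":memory:")
billing.execute("CREATE TABLE invoices (customer_id INTEGER, total REAL)")
billing.executemany("INSERT INTO invoices VALUES (?, ?)", [(1, 40.0), (1, 60.0)])


def customer_view(customer_id):
    """Virtual layer: query both sources on demand and join in memory.

    Nothing is copied into a central store, so every call sees live data.
    """
    name_row = crm.execute(
        "SELECT name FROM customers WHERE id = ?", (customer_id,)
    ).fetchone()
    total = billing.execute(
        "SELECT COALESCE(SUM(total), 0) FROM invoices WHERE customer_id = ?",
        (customer_id,),
    ).fetchone()[0]
    return {
        "id": customer_id,
        "name": name_row[0] if name_row else None,
        "invoice_total": total,
    }


before = customer_view(1)
billing.execute("INSERT INTO invoices VALUES (1, 25.0)")  # source changes...
after = customer_view(1)  # ...and the next query reflects it immediately
print(before, after)
```

The trade-off is visible here too: every query hits the source systems, and there is no stored history to analyze.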

Top 10 Best Data Integration Tools

To offer a high-value comparison, we have evaluated the top data integration tools across the market, categorizing them based on their primary use case, architecture, and overall enterprise fit.

| Tool | Architecture Focus | Best For | Key Differentiator |
| --- | --- | --- | --- |
| 1. Fivetran | ELT (Cloud-Native) | Rapid, automated data movement and cloud migration. | Zero-maintenance pipelines; high volume of pre-built connectors. |
| 2. Informatica IDMC | ETL/ELT (Hybrid) | Enterprise-grade, complex, and high-governance data environments. | Market veteran with unparalleled data governance and quality features. |
| 3. Talend Data Fabric | ETL/ELT (Hybrid) | Unified data management, data quality, and big data processing. | Open-source core, comprehensive data quality, and preparation suite. |
| 4. AWS Glue | ELT/ETL (Serverless) | Businesses heavily invested in the Amazon Web Services ecosystem. | Deep integration with S3, Redshift, and other AWS services; serverless. |
| 5. Azure Data Factory (ADF) | ETL/ELT (Cloud) | Businesses in the Microsoft Azure and hybrid cloud environment. | Visual, code-free interface and native integration with Azure Synapse. |
| 6. SnapLogic | iPaaS, ELT | Application integration and connecting cloud-to-cloud/on-premise systems. | AI-powered integration (“Snaps”), visual designer, and strong API management. |
| 7. Hevo Data | ELT (No-Code) | Small to mid-size businesses needing fast, simple setup and maintenance. | Simple, intuitive interface with automated schema management. |
| 8. IBM InfoSphere | ETL (Traditional) | Large enterprises requiring high-performance batch and complex transformations. | Robust parallel processing capabilities for high-volume, on-premise tasks. |
| 9. Airbyte | ELT (Open-Source) | Developers and teams that need extreme flexibility and custom connectors. | Huge open-source connector catalog (600+) and easy customization. |
| 10. Boomi (Dell Boomi) | iPaaS, ELT | Holistic integration, including application, process, and data integration. | Unified low-code platform for API management and workflow automation. |

These platforms represent the spectrum of enterprise needs, from traditional, highly governed ETL (Informatica, IBM) to agile, cloud-native ELT solutions (Fivetran, Hevo, Airbyte). When comparing these systems, look beyond the feature list and match the tool’s core architecture to your organization’s specific data velocity and volume requirements.

Beyond the Basics: Essential Features That Define Enterprise-Grade Data Integration Tools

While extraction and loading are table stakes, truly enterprise-grade solutions distinguish themselves through advanced capabilities focused on governance, speed, and efficiency.

Real-Time and Streaming Capabilities

Contemporary business decisions often require data latency measured in seconds rather than hours. The best tools provide change data capture (CDC) and streaming capabilities that allow data flows to be processed continuously.

For example, a finance department may want customer payment records updated immediately, or a logistics company may need real-time shipment tracking. These situations call for tools that support distributed streaming technologies such as Kafka or Spark Streaming, or that offer native data streams.
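To make the CDC idea concrete, the sketch below applies a stream of change events to a replica, one event at a time. The event shape (`op`, `key`, `row`) is an assumption made for this example; real CDC tools typically read the database’s transaction log and define their own formats.

```python
def apply_change(replica, event):
    """Apply one change event to the replica, keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        # Merge changed columns into whatever the replica already holds.
        replica[key] = {**replica.get(key, {}), **event["row"]}
    elif op == "delete":
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


# Hypothetical change log for a shipment-tracking table.
CHANGE_LOG = [
    {"op": "insert", "key": 101, "row": {"status": "packed", "city": "Lyon"}},
    {"op": "update", "key": 101, "row": {"status": "in_transit"}},
    {"op": "insert", "key": 102, "row": {"status": "packed", "city": "Oslo"}},
    {"op": "delete", "key": 102},
]

replica = {}
for event in CHANGE_LOG:
    apply_change(replica, event)  # processed continuously, not in a nightly batch

print(replica)
```

Only the changed rows travel over the wire, which is what keeps latency low compared with reloading the full table.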

Data Governance, Quality, and Lineage Best Practices

The value of any integrated data set depends on its quality. Enterprise tools must have built-in features for data profiling, cleansing, de-duplication, and standardization. They must also provide strong data lineage capabilities: being able to trace data back to its source, through every transformation, is essential for demonstrating compliance with regulations like GDPR and HIPAA. This is the basis of data-driven business intelligence.
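As a small illustration of standardization, de-duplication, and lineage working together, the sketch below keeps the first record per email and logs what happened to every input. The field names, the "first record wins" rule, and the lineage entry shape are assumptions for the example, not a standard.

```python
def standardize(record):
    """Normalize casing and whitespace so equivalent values compare equal."""
    return {
        "email": record["email"].strip().lower(),
        "country": record["country"].strip().upper(),
    }


def deduplicate(records):
    """Keep the first record per email and log a lineage entry for every input."""
    kept = {}
    lineage = []
    for record in records:
        clean = standardize(record)
        action = "dropped_duplicate" if clean["email"] in kept else "kept"
        kept.setdefault(clean["email"], clean)
        lineage.append(
            {"source": record["source"], "email": clean["email"], "action": action}
        )
    return list(kept.values()), lineage


# Hypothetical records arriving from two source systems.
RECORDS = [
    {"source": "crm", "email": "Ada@Example.com ", "country": "fr"},
    {"source": "webshop", "email": "ada@example.com", "country": "FR"},
    {"source": "crm", "email": "bob@example.com", "country": " no"},
]

clean_records, lineage = deduplicate(RECORDS)
print(clean_records)
print(lineage)
```

The lineage list is what lets an auditor answer "where did this row come from, and what was discarded along the way?"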

Metadata Management

Well-structured metadata management provides context and history for all integrated assets, including business metadata (what the data means), technical metadata (schema, source systems, etc.), and operational metadata (e.g., job runtimes, error logs). By using this metadata effectively, the best data integration tools can detect and handle schema changes automatically, shorten development timelines, and simplify maintenance.
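Schema change detection is one place where technical metadata pays off directly. A minimal sketch, assuming the tool stores each run’s schema as a simple column-to-type mapping (the column names and types here are made up):

```python
def diff_schema(old, new):
    """Compare two column->type mappings and report what drifted."""
    return {
        "added": {col: typ for col, typ in new.items() if col not in old},
        "removed": sorted(col for col in old if col not in new),
        "retyped": {
            col: (old[col], new[col])
            for col in old
            if col in new and old[col] != new[col]
        },
    }


# Hypothetical technical metadata captured on two consecutive pipeline runs.
LAST_RUN = {"id": "INTEGER", "email": "TEXT", "amount": "INTEGER"}
THIS_RUN = {"id": "INTEGER", "amount": "REAL", "signup_date": "DATE"}

drift = diff_schema(LAST_RUN, THIS_RUN)
print(drift)
```

A pipeline could use a report like this to add new columns automatically while pausing for review when a column disappears or changes type.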

Low-Code/No-Code Development

Visual, low-code tools have given rise to the citizen integrator. Their drag-and-drop environments enable people other than data engineers (such as business analysts) to build and manage pipelines with little to no custom code. This shortens development time and reduces dependence on a small number of highly specialized technical resources.

Future-Proofing Your Strategy: The Rise of AI Data Integration and Generative Tools

The next frontier for data management is Artificial Intelligence. The question “what are the best AI tools for data integration?” is becoming more central to technology roadmaps. AI is not just a feature but a layer of intelligence built into the entire integration lifecycle, enabling greater automation and speed.

AI data integration uses machine learning to automate what used to be manual and time-consuming processes:

- Smart Mapping: AI can learn data schemas from different sources and then recommend or automatically map data fields, a task that traditionally takes hours.

- Quality Prediction: ML models can identify anomalies and predict data quality issues, raising alerts before bad data reaches the target system.

- Performance Improvement: AI algorithms can analyze job run times, resource utilization, and data flow patterns to tune pipeline execution and make better use of cloud computing resources.

- Unstructured Data Processing: Advanced NLP (Natural Language Processing) tools allow systems to ingest, interpret, and structure data from sources such as customer emails, legal documents, and social media feeds, which conventional tools cannot do.
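To give a feel for what a mapping suggestion looks like, here is a deliberately simple stand-in. It does not use machine learning: it uses plain string similarity from Python’s standard `difflib` module, and the field names and the 0.6 cutoff are assumptions. Production tools would typically also consider data types, value distributions, and past mappings.

```python
import difflib


def suggest_mapping(source_fields, target_fields, cutoff=0.6):
    """Suggest the closest target field for each source field, or None."""
    mapping = {}
    for field in source_fields:
        matches = difflib.get_close_matches(field, target_fields, n=1, cutoff=cutoff)
        mapping[field] = matches[0] if matches else None  # None = needs a human
    return mapping


# Hypothetical field names from a source system and a target schema.
SOURCE_FIELDS = ["cust_email", "first_nm", "phone_no", "fax"]
TARGET_FIELDS = ["customer_email", "first_name", "phone_number", "postal_code"]

mapping = suggest_mapping(SOURCE_FIELDS, TARGET_FIELDS)
for source, target in mapping.items():
    print(f"{source} -> {target}")
```

Fields that come back as `None` are the ones a person still has to map by hand, which is usually a much shorter list than the full schema.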

This is giving rise to what are being called “intelligent integration platforms”, which build on iPaaS (integration platform as a service) offerings to connect applications and data points more quickly and accurately than before. For businesses that want to take advantage of these new features, the ability to process human-readable text is paramount. For example, you could first use a web scraper Chrome extension to ingest data and then use AI tools to normalize the output.

Magical API: Integrating Resume Parsing

In specialized areas like Human Resources and Talent Acquisition, the main data integration challenge is extracting structured insights from large volumes of unstructured text, such as resumes and professional profiles. This is where a highly specialized, AI-powered API becomes a critical component of a larger data integration approach.

The magical api resume parsing service addresses one of the hardest data integration problems in recruitment: how to turn resumes that are written and organized (or not organized) in complex, variable formats into clean, normalized JSON or XML data.

Rather than relying on general ETL or ELT tools, which may struggle with the nuances of human-written documents, magical api uses specialized AI models that recognize and extract structured fields such as contact information, work experience, education, and skills.

This is an important step for any organization seeking to automate its Applicant Tracking System (ATS) or Customer Relationship Management (CRM) pipeline. For example, an applicant tracking system could use the Resume Parser to convert a PDF or Word document into structured fields that are ready for automatic ingestion into the database.

In parallel, a LinkedIn Profile Scraper and a LinkedIn Company Scraper can help recruitment, sales, or market research teams enrich their internal records with publicly available professional and corporate intelligence. For this kind of data, a narrow, API-oriented integration is typically easier, faster, and more efficient than configuring a monolithic platform, however feature-rich that platform may be. The structured output can then be ingested through any of the data integration tools listed above for further processing and analytics.
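Once a parser has returned structured output, the remaining integration step is to flatten it into rows a database can ingest. The JSON below is a made-up shape used only for illustration; it is not the actual response format of any resume parsing API, and the `to_rows` helper is likewise hypothetical.

```python
import json

# Hypothetical parser output; real field names depend on the service you use.
PARSED_RESUME_JSON = """
{
  "contact": {"name": "Ada Lovelace", "email": "Ada@Example.com"},
  "work_experience": [
    {"company": "Analytical Engines Ltd", "title": "Data Engineer"},
    {"company": "Difference Co", "title": "Analyst"}
  ],
  "skills": ["SQL", " Python ", "ETL"]
}
"""


def to_rows(candidate_id, parsed):
    """Flatten one parsed resume into rows for three relational tables."""
    contact = parsed["contact"]
    candidate = (candidate_id, contact["name"], contact["email"].lower())
    experience = [
        (candidate_id, job["company"], job["title"])
        for job in parsed.get("work_experience", [])
    ]
    skills = [(candidate_id, s.strip().lower()) for s in parsed.get("skills", [])]
    return candidate, experience, skills


candidate, experience, skills = to_rows(42, json.loads(PARSED_RESUME_JSON))
print(candidate)
print(experience)
print(skills)
```

From here the rows can be handed to whichever loader or integration tool already feeds the ATS database.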

Comparing the Best Data Integration Platforms for Modern Businesses

Selecting the best data integration tool requires a thoughtful assessment of key factors, including your organization’s budget, technical skill levels, and existing technology stack. The vendor comparison table above is a starting point, but you should also weigh the following criteria carefully:

For the Enterprise with Legacy Systems and Complex Needs

Informatica IDMC and IBM InfoSphere DataStage continue to lead here. They offer strong reliability, support highly complex transformations, and provide the governance controls necessary in heavily regulated industries like finance and health care. They typically cost more and require specialized technical staff, but they perform very well on mission-critical, high-volume batch jobs.

For the Cloud-Friendly, Fast-Pivoting, ELT-Focused Organization

Fivetran, Hevo Data, and Airbyte lead the ELT category. Their focus is on speed, automation, and low maintenance. Fivetran is known for its “zero-maintenance” approach, automating schema changes and pipeline resilience. Being open-source, Airbyte appeals to data engineering teams that want full, hands-on control of their pipelines, along with the ability to build custom connectors for niche sources such as an internal LinkedIn Profile Scraper utility.

For Integrated Process Automation (iPaaS)

When the goal shifts from only integrating data to automating business processes across several applications, SnapLogic and Boomi lead the pack. An example is taking a new lead created in Salesforce (SaaS A), passing it to an accounting system (SaaS B), and launching a communication workflow (SaaS C). These platforms tend to offer strong API and workflow management functionality alongside their data integration capabilities.

When working with sensitive candidate data, companies need a focused and robust Resume Parser. It is usually more advantageous to integrate this capability through a dedicated API than to build parsing logic inside an ETL platform, regardless of how capable the ETL product is. This hybrid approach (a general-purpose platform for the majority of data movement and APIs for targeted or complex data types) is rapidly becoming the pragmatic standard for modern data architecture.

Conclusion: Data Integration Tools

Choosing appropriate data integration tools is a critical decision that affects any modern business’s ability to compete. The market has moved well beyond simple data transfer; today’s leading platforms provide the automation, intelligence, and flexibility needed to support real-time analytics and complex digital ecosystems.

Whether you prefer the governance-driven structure of a traditional ETL giant, the automated and agile approach of a cloud-native ELT provider, or the focused power of something like magical api for resume parsing, the goal is the same: unify your data.

These tools break down data silos and create a single source of truth, allowing the business to gain deeper insights, streamline operations, and build data-driven strategies that deliver business impact. Invest in the platform that supports your scale, architecture, and future-state ambitions, and your data can become a legitimate, sustainable competitive advantage in your marketplace.

FAQs about top data integration tools

1. What is the main distinction between ETL and ELT?

The primary difference is when transformation happens. In an ETL (Extract, Transform, Load) process, the data is transformed before it is brought into the target warehouse. In ELT (Extract, Load, Transform), data is ingested in its raw format into the target (usually a cloud data warehouse), and the transformation happens after the data has been committed to the warehouse, using the target’s compute power. ELT is generally faster to ingest and is better suited to big data and unstructured data.

2. How do data integration tools help data governance and compliance?

Modern tools support governance through features such as data masking, encryption, role-based access control, and detailed data lineage tracking. Lineage tracking shows where data originated and how it was transformed, which is important for compliance with regulations such as GDPR, CCPA, and HIPAA. They can also integrate with niche tools so that data from sources like a LinkedIn Company Scraper falls under the same lifecycle governance.
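Data masking, one of the features named above, can be sketched with the standard library alone. This is an illustrative sketch rather than a compliance-grade implementation: the key, the 16-character token length, and the masking format are all assumptions, and a real deployment would keep the key in a secrets manager.

```python
import hashlib
import hmac

SECRET_KEY = b"demo-key-rotate-me"  # hypothetical; never hard-code a real key


def pseudonymize(value):
    """Replace a value with a stable keyed hash so joins still work."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def mask_email(email):
    """Show only the first character of the local part for display purposes."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


record = {"email": "ada@example.com", "plan": "pro"}
masked = {
    "email_token": pseudonymize(record["email"]),  # joinable, not readable
    "email_display": mask_email(record["email"]),  # readable, not identifying
    "plan": record["plan"],
}
print(masked)
```

The token lets downstream tables join on the same customer without ever storing the raw email address.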

3. Is open-source data integration a practical alternative for larger organizations?

Yes. Large organizations continue to adopt open-source tools such as Apache Airflow and Airbyte. These tools typically require more internal engineering resources to set up, maintain, and secure than paid SaaS solutions. However, they allow much more flexibility and customization and can offer greater cost savings, particularly for data that is proprietary or specialized.

4. What part does an API play in data integration?

APIs (Application Programming Interfaces) are a key part of the equation. They are the standard interface for extracting data from SaaS apps (e.g. Salesforce or Google Sheets). Data integration tools use APIs to connect to sources. Beyond those simple connectors, specialized API-powered services such as magical api can do things like run a Resume Parser and return structured output that feeds into the main data integration pipeline.
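Most API-based connectors boil down to a pagination loop: request a page, emit its records, and follow the cursor until there is none. The sketch below uses an in-memory stub instead of a real HTTP call, and the response shape (`items`, `next_cursor`) is an assumption; actual APIs name and structure these differently.

```python
# Hypothetical dataset behind the stubbed API.
FAKE_API_DATA = [{"id": i} for i in range(1, 8)]


def fetch_page(cursor, page_size=3):
    """Stand-in for an HTTP request to a paginated SaaS endpoint."""
    start = cursor or 0
    end = start + page_size
    next_cursor = end if end < len(FAKE_API_DATA) else None
    return {"items": FAKE_API_DATA[start:end], "next_cursor": next_cursor}


def extract_all(fetch):
    """Follow the cursor until the API reports there are no more pages."""
    cursor = None
    while True:
        page = fetch(cursor)
        yield from page["items"]
        cursor = page["next_cursor"]
        if cursor is None:
            break


records = list(extract_all(fetch_page))
print(len(records), records[0], records[-1])
```

Passing the fetch function in as a parameter keeps the loop testable and lets the same extractor work against different endpoints.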

5. What should I invest more time in: connectors or advanced features?

Prioritize trustworthy, well-built connectors to your essential data sources first. If a tool cannot connect well to your core ERP or primary database, it provides no value. Once your core sources are connected reliably, you can invest time in advanced features such as data quality, real-time processing, and AI/automation to make those pipelines faster and more capable.