Data drives the digital era: whether it’s for market research, competitive analysis, price monitoring, or generating leads, anyone who can extract relevant information from the internet has developed a valuable skill.

While many business users, marketers, and analysts might think the term “web scraping” involves complex Python scripts, command line interfaces, and developer headaches, what if we told you the power to harvest your own web data is now entirely accessible to anyone, regardless of their coding abilities?

The field of data extraction has transformed. Nowadays, you don’t have to be a programmer to learn how to scrape data from a website efficiently. With a new generation of robust and user-friendly no-code and low-code applications, you can simply point, click, and save the data you need, often in less time than it would take to write and debug a script.

This guide explains how to use no-code web scraping in its simplest form. We will cover the easiest methods available, from basic browser extensions to advanced cloud-based platforms, and show you how to transform unstructured web content into a clean, usable dataset without writing a line of code. Whether you are a small-business owner, a marketer, or a researcher, you can harness the potential of web data.

What Is Web Scraping Without Coding?

At its essence, web scraping is the automated extraction of large volumes of data from websites. Traditionally, this has involved a developer writing specialized scripts in languages such as Python, PHP, or JavaScript (Node.js). No-code web scraping removes the programming and replaces it with intuitive, visual interfaces.

You simply click on the element you want to extract to collect the information you need. The no-code tool (often a desktop tool, Chrome extension, or cloud service) automatically creates the code for you and figures out the required logic.

This technology lowers the technical barrier to entry. You get the advantages of automated data collection (speed, scalability, and consistency) without needing to know how to code, maintain the tool, or manage infrastructure. The focus stays on the data, not on the technology.

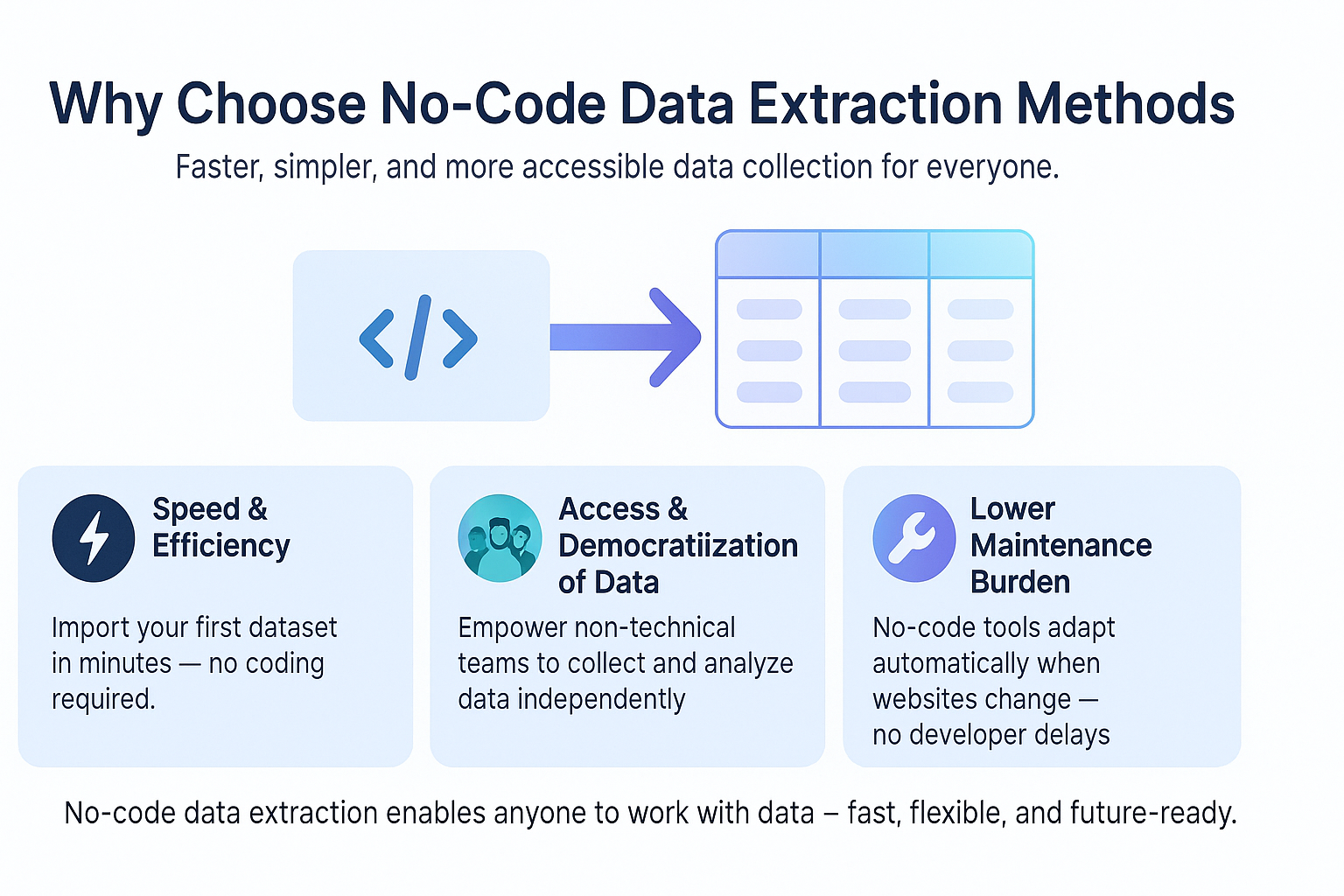

Why Choose No-Code Data Extraction Methods

There are great reasons to select a no-code approach to your data extraction requirements, particularly for teams and individuals who value speed of insights and operational efficiency.

Speed and Efficiency

A developer often needs a lot of time to build, test, and maintain a scraping script. A no-code tool can greatly reduce that time and let you import your first dataset within minutes. In practice, that speed translates to faster market research, quicker price adjustments, and more immediate reactions to competitors.

Access and Democratization of Data

By far the greatest benefit is that anyone can do it. You do not need to hire a developer, nor obtain any sophisticated programming language skills. This allows non-technical teams – marketing, sales, business intelligence, product – to become self-sufficient in their data collection efforts and create a more data-driven culture of accountability throughout the organization.

Envision a marketing team being able to collect data quickly to guide a new campaign, without having to wait for a ticket to the IT department.

Lower Maintenance Burden

Websites change their structure constantly. For a traditional scraper, even a small change (a renamed class, for example) can break the script completely, and you are left waiting for a developer to fix it, with the costs and delays that involves. Many no-code platforms are built to be resilient to such changes, or have a process in place to adapt to them, which insulates you from the routine structural changes of the target website.

Tools and Programming Languages Commonly Used for Web Scraping

Although this guide emphasizes the more straightforward, no-code methods, it’s useful to take a moment to understand the traditional scraping landscape, because it provides context for why no-code tools are so significant.

Traditional (Code-Based) Methods

Code remains the gold standard for complex, large-scale, and highly customized scraping projects.

- Python: The most common choice by far, thanks to its simplicity and its powerful ecosystem of libraries. Beautiful Soup parses HTML, and Scrapy helps you build scalable web crawlers. Tutorials with titles like “How to scrape data from a website with Python” describe this coding-heavy approach.

- JavaScript (Node.js): Great for scraping modern web applications that rely heavily on client-side rendering. Libraries like Puppeteer and Playwright utilize “headless browsers” to execute JavaScript just like a real user/browser.
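For context, here is a minimal sketch of the kind of script the code-based approach involves. It uses Python’s standard-library `html.parser` instead of Beautiful Soup or Scrapy so that it runs without installing anything. The sample HTML, the `product-title` class name, and the `TitleParser` class are all assumptions made up for illustration.

```python
from html.parser import HTMLParser

# Hypothetical page fragment; a real script would download this HTML first.
SAMPLE_HTML = """
<ul>
  <li><h2 class="product-title">Blue Mug</h2><span class="price">$8.00</span></li>
  <li><h2 class="product-title">Red Mug</h2><span class="price">$9.50</span></li>
</ul>
"""


class TitleParser(HTMLParser):
    """Collects the text of every <h2 class="product-title"> element."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs.
        if tag == "h2" and dict(attrs).get("class") == "product-title":
            self.in_title = True

    def handle_data(self, data):
        if self.in_title:
            self.titles.append(data.strip())

    def handle_endtag(self, tag):
        if tag == "h2":
            self.in_title = False


parser = TitleParser()
parser.feed(SAMPLE_HTML)
print(parser.titles)
```

Note how the script depends on the exact tag and class name: if the site renamed `product-title`, the list would come back empty. That fragility is the maintenance burden the no-code tools try to hide.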

The No-Code Bridge: APIs and Cloud Services

For users who need the scalability and reliability of a developer-built solution but prefer not to write a scraper themselves, scraping APIs are an ideal compromise. A scraping API puts a simple interface in front of a proven technical architecture and handles the back-end complexity (IP rotation, CAPTCHA solving, JavaScript rendering, and so on) behind the scenes, so that a simple request returns clean, structured data.

A dedicated scraping API for LinkedIn and the other specialized services discussed below are good examples of this efficient, low-effort way to hand off the technical work and get the data you need.
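To make the "simple request" idea concrete, here is a rough sketch of what talking to a scraping API could look like. The endpoint `https://api.example.com/v1/scrape`, the `url` and `format` parameter names, and the sample response are all hypothetical; every real service defines its own, so treat this as an illustration of the shape of the exchange and nothing more.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint and parameter names; check your provider's documentation.
API_ENDPOINT = "https://api.example.com/v1/scrape"


def build_request_url(target_url, output_format="json"):
    """Build the URL for a GET request asking the service to scrape target_url."""
    query = urlencode({"url": target_url, "format": output_format})
    return f"{API_ENDPOINT}?{query}"


# A made-up response body standing in for what such a service might return.
SAMPLE_RESPONSE = '{"title": "Blue Mug", "price": "8.00"}'

request_url = build_request_url("https://example.com/products/1")
record = json.loads(SAMPLE_RESPONSE)

print(request_url)
print(record["title"], record["price"])
```

The point of the sketch is what is missing: there is no proxy handling, no browser automation, and no HTML parsing on your side.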

Best Tools to Scrape Data from a Website Without Coding

The market is full of tools that allow you to scrape data without writing code. They fall into two general categories: browser extensions for quick and simple scrapes, and desktop/cloud applications for more complex and large-scale projects.

1. Dedicated Desktop and Cloud Scrapers (For Scale and Complexity)

These tools offer much of the power and flexibility of a custom-coded solution, delivered through a much easier-to-use visual builder.

- Octoparse: A full-featured desktop application with a visual point-and-click interface. It is particularly well-suited to tricky websites that involve pagination, infinite scrolling, or logins. Octoparse has a generous free tier and is one of the most popular options for enterprise applications.

- ParseHub: A competing visual scraper that allows you to build complex workflows and establish relationships between data points. It is designed for web pages highly reliant on JavaScript, enabling you to scrape dynamic data that many scrapers struggle to extract.

- Import.io: Similar to Octoparse, but with a slightly different approach that focuses on delivering data as a service. Import.io provides an entire platform to extract, transform, and integrate web data into business processes, which is particularly useful for teams that manage different types of data.

2. Browser Extensions (For Quick, Single-Page Scrapes)

Extensions allow you to get data from the web extra fast. These are especially useful for smaller projects where you want to grab data points while viewing a webpage.

- Web Scraper (Chrome/Firefox Extension): This is a popular tool that lets you build a “sitemap” (the scraping logic) directly in the browser’s developer tools. It has a slightly steeper learning curve than other options, but it is very powerful.

- Instant Data Scraper: One of the simplest options. Most of the time, you just click the extension icon, and it will automatically find tabular data on the page and give you a simple download.

These tools are essential if you are someone who often wants to scrape data from a website without going through the lengthy process of programming.

Use Magical API for Web Scraping Without Coding

Within the no-code space, API services are the most reliable and simplest way to extract large amounts of data consistently and at scale. Many no-code solutions rely on the front-end browser, but Magical API goes to the source and gives you the reliability of a high-performance project without the technical complexity of actually building one. This is where the magic happens for easy data collection.

If you’re a business user trying to collect data from dynamic websites with a lot of resistance (like job boards, social networks, or even e-commerce websites), a visual scraper will eventually fail you. Anti-bot measures, IP blocking, and JavaScript rendering will quickly turn your no-code project into a time-consuming, full-time job. Magical API takes that data collection problem off your plate!

The Power of Magical API: Reliability Meets Simplicity

Magical API is built for reliability and scalability, providing a simple interface to access powerful enterprise scraping infrastructure.

- Guaranteed Anti-Blocking: Say goodbye to proxy lists and CAPTCHA. Magical API features a large, rotating pool of residential and data center proxies along with proprietary anti-bot bypass technologies. You make the request, and our infrastructure takes care of the complex negotiations with the target website so you get the data every time.

- No Maintenance: When a target website changes its HTML, your traditional scraper or visual tool breaks. Magical API’s systems continuously monitor target sites and update the scrapers on our backend. Everything keeps flowing without interruption, allowing you to focus on analyzing the data instead of fixing your scraping methods.

- Structured Output, Instantly: You specify the URL, and Magical API returns clean, ready-to-use data in the format you request (JSON or CSV), with no tedious post-processing or data cleaning. For instance, whether you use our specialized LinkedIn Profile Scraper or LinkedIn Company Scraper, we guarantee clean and structured professional data fields, ready to update your CRM or database.

- Specialized Scraping Agents: We have specialized, pre-built agents for the hardest-to-scrape targets that deliver accurate data with compliance in mind. This includes a simple way to make a LinkedIn scraping API request or to collect LinkedIn job listings. It takes much less effort than searching forums for how to scrape a website with Python and then adapting the answer to LinkedIn.

- Ultimate Scalability: With Magical API, you can run one scrape or millions of concurrent requests, and the service is designed to scale as your needs grow. This is infrastructure-as-a-service for data extraction, giving you the output of a dedicated engineering team without the concerns of HR and payroll.

No-code visual builders are a great way to learn how to scrape data from a website. But for business-critical, high-volume data use cases, Magical API offers the simplest, most reliable, and most powerful approach to actionable intelligence.

How to Scrape Data with Google Sheets (No Coding Needed)

One of the most accessible and often overlooked methods involves leveraging built-in functions within Google Sheets. This method is completely free and requires absolutely zero external software, though it is best suited for simple, static websites.

The Power of IMPORTHTML and IMPORTXML

Google Sheets has two powerful functions designed for data import:

- IMPORTHTML: Used to import data from a table or a list within a website.

- IMPORTXML: Used to import data from any element on a website by using an XPath query (which sounds technical, but your browser’s developer tools can copy an element’s XPath for you).

Step-by-Step: Using IMPORTHTML

This function is the easiest starting point for anyone looking to learn how to scrape data from a website into Excel (via Google Sheets).

The Syntax: =IMPORTHTML("URL", "query", index)

- Identify the Data: Find a website with data organized in a standard HTML table or list.

- Enter the Formula: In an empty cell in Google Sheets, enter the formula.

- “URL”: The full web address of the page.

- “query”: This will be either “table” or “list”.

- index: The numerical order of the table or list on the page (if there are multiple).

Example: If you wanted to extract the first table on a Wikipedia page about countries, your formula might look like this:

=IMPORTHTML("https://en.wikipedia.org/wiki/List_of_countries_by_population", "table", 1)

Google Sheets fetches the page, and the table fills the cell you typed the formula into, expanding into the columns to its right and the rows below it.
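If you are curious what a function like IMPORTHTML has to do behind the scenes, the following is a rough Python sketch of the same idea: find every table in a page and return the one at a given 1-based index. It is not how Google Sheets is implemented; the `TableParser` class, the `import_table` helper, and the sample HTML are assumptions for illustration, and nested tables are not handled.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects every <table> on a page as a list of rows of cell text."""

    def __init__(self):
        super().__init__()
        self.tables = []  # one list of rows per table found
        self.row = None   # cells of the row currently being read
        self.cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self.row = []
        elif tag in ("td", "th") and self.row is not None:
            self.cell = []

    def handle_data(self, data):
        if self.cell is not None:
            self.cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self.cell is not None:
            self.row.append("".join(self.cell).strip())
            self.cell = None
        elif tag == "tr" and self.row is not None:
            self.tables[-1].append(self.row)
            self.row = None


def import_table(html, index):
    """Return the table at the given 1-based index as rows of strings."""
    parser = TableParser()
    parser.feed(html)
    return parser.tables[index - 1]


# Made-up page with two tables.
SAMPLE = """
<table><tr><th>Country</th><th>Population</th></tr>
<tr><td>Exampleland</td><td>1,000</td></tr></table>
<table><tr><td>Some other table</td></tr></table>
"""

rows = import_table(SAMPLE, 1)
print(rows)
```

The `index` argument plays the same role as the third parameter of IMPORTHTML: it picks which table on the page you want.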

Exporting Data to Excel

Once the data is in Google Sheets, getting it into Microsoft Excel is a simple matter of a few clicks: File -> Download -> Microsoft Excel (.xlsx). This completes the goal of knowing how to scrape data from a website into Excel using a free, no-code approach.

Step-by-Step Guide: How to Scrape Data Using Browser Extensions

Browser extensions offer an easy and fast way to get started with no-code scraping. The steps below walk through what the experience typically looks like with this type of visual scraper extension.

1. Installation and Activation

- Locate Your Tool: Go to the Chrome Web Store (or Firefox Add-ons) and search for a tool such as “Web Scraper”, “Instant Data Scraper”, or “Simplescraper.”

- Installation: Click “Add to Chrome” (or equivalent).

- Navigation: Navigate to the website you want to scrape.

2. Visually Defining the Data (The Point and Click Magic)

This is the “no-code” magic.

- Open Tool: Simply click the extension icon.

- Start a New Project: This opens a new scraping task.

- Select Elements: The tool will enter a “selection mode.” Click on the first piece of data you want to scrape (for example, a product title). The tool will usually highlight that element and show details about its type.

- Confirm the Pattern: The tool will automatically look for “similar” elements. Click on a second similar element to confirm the selection.

- Name Your Column: Give that column a name (e.g., “Product_Name”).

- Repeat Process: This entire process is repeated for all of the element fields that need to be extracted (Price, Rating, URL, etc.).

3. Handling Multiple Pages (Pagination)

For obtaining data from multiple pages (e.g., a category page listing):

- Identify the Next button: Simply click the Next Page/Load More button using the extension’s selector.

- Designate as a Click or Pagination link: Tell the tool to follow this link once it has finished scraping the current page. The tool will then loop through all the following pages automatically.
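Under the hood, pagination is just a loop: scrape the current page, follow the "next" link, and stop when there is none. The sketch below shows that loop in Python against a made-up, in-memory site. The `PAGES` data, the `scrape_all_pages` function, and the `max_pages` safety limit are assumptions for illustration, not the logic of any particular extension.

```python
# Hypothetical site: each "page" lists some items and the URL of the next page.
PAGES = {
    "/products?page=1": {"items": ["Blue Mug", "Red Mug"], "next": "/products?page=2"},
    "/products?page=2": {"items": ["Green Mug"], "next": None},
}


def scrape_all_pages(start_url, load_page, max_pages=50):
    """Collect items page by page, following each page's 'next' link."""
    items = []
    visited = set()
    url = start_url
    # Stop when there is no next link, when a link loops back to a page
    # already seen, or when the safety limit is reached.
    while url is not None and url not in visited and len(visited) < max_pages:
        visited.add(url)
        page = load_page(url)
        items.extend(page["items"])
        url = page["next"]
    return items


all_items = scrape_all_pages("/products?page=1", PAGES.get)
print(all_items)
```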

4. Running the Scrape and Exporting Data

- Initiate the run: Start the scraping process. The tool will imitate a user navigating the website and collect the data into organized rows and columns as it goes.

- Download: At the end of the scraping process, the tool will present the collected data for download, usually as a CSV file, which you can then open in Excel or Google Sheets.
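The CSV file you download is simply the named columns from step 2 written out row by row. As a small illustration, this Python sketch turns a couple of made-up records into CSV text with the standard `csv` module. The sample rows and the `to_csv` helper are assumptions; the `Product_Name` column echoes the naming example above.

```python
import csv
import io

# Made-up scraped records; the keys become the CSV column headers.
rows = [
    {"Product_Name": "Blue Mug", "Price": "8.00"},
    {"Product_Name": "Red Mug", "Price": "9.50"},
]


def to_csv(records):
    """Serialize a list of dicts to CSV text, using the first record's keys as headers."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(records[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


csv_text = to_csv(rows)
print(csv_text)
```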

Automating Data Collection with No-Code Platforms

For more sophisticated users, or for users who require constantly up-to-date data, no-code platforms elevate scraping into a fully automated process.

Scheduling and Cloud Runs

- Cloud-Based Execution: Extensions run locally and use your own computer’s resources. Dedicated platforms (like Octoparse or ParseHub) instead run your scraping jobs on their own cloud servers, so you can close the browser tab or turn off your computer and the jobs will keep running.

- Scheduling: On a dedicated platform, you can set the scraper to run automatically at intervals that you choose: hourly, daily, or weekly. This is especially useful for data that changes often, such as prices or inventory levels.

Integration with Business Systems

Today’s no-code scrapers do more than just produce a CSV. They can connect right into the tools you are already using:

- Databases: Push directly to MySQL, MongoDB, or third-party storage.

- Analytics: Send directly to Google Analytics or Tableau for instant reporting.

- CRMs: For sales-focused data gathering, automatically create leads in Salesforce or HubSpot. As an example, a tool could function as a LinkedIn Profile Scraper and place contact information directly into your CRM with a little advance preparation. Likewise, a configured LinkedIn Company Scraper could automatically enrich your database with updated information about companies.

This kind of automation dramatically reduces manual work and helps ensure that business decisions are based on current data.
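As a rough picture of what "pushing to a database" means, the sketch below stores scraped rows in a table and updates existing rows on a later run. It uses Python’s built-in `sqlite3` with an in-memory database as a stand-in for MySQL or MongoDB, so the SQL shown is SQLite’s dialect. The `products` table, its columns, and the `save_products` helper are assumptions for illustration.

```python
import sqlite3


def save_products(conn, rows):
    """Insert scraped (name, price) rows, replacing any row with the same name."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT PRIMARY KEY, price REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO products (name, price) VALUES (?, ?)", rows
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database, discarded on exit
save_products(conn, [("Blue Mug", 8.0), ("Red Mug", 9.5)])
save_products(conn, [("Red Mug", 8.99)])  # a later run updates the price

stored = conn.execute("SELECT name, price FROM products ORDER BY name").fetchall()
print(stored)
```

Keying the table on the product name is what lets a scheduled scrape refresh existing records instead of piling up duplicates.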

Understanding the Legal and Ethical Landscape of Web Scraping

Though no-code tools can help with the mechanics of scraping, they do not relieve you of the obligation to scrape responsibly. Knowing the rules will be crucial to a successful and sustainable data strategy.

1. Follow the robots.txt file

Before you scrape anything, be sure to check the target website’s “robots.txt” file (found at [website-url]/robots.txt). This file gives web robots (like your scraper) instructions about which parts of the website they can and cannot crawl. The robots.txt file is not legally enforceable, but following it is a best practice and indicates good intentions.
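For readers who want to see how such a check works, Python’s standard library includes a robots.txt parser. The sketch below feeds it a made-up robots.txt and asks whether two URLs may be fetched. The rules, the `my-scraper` user agent name, and the example.com URLs are assumptions for illustration; a real check would load the site’s actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. For a live site you would instead call
# set_url("https://<site>/robots.txt") followed by read().
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

allowed_public = rules.can_fetch("my-scraper", "https://example.com/products")
allowed_private = rules.can_fetch("my-scraper", "https://example.com/private/data")

print(allowed_public)
print(allowed_private)
```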

2. Check the Terms of Service (ToS) for the website

Most commercial websites state in their ToS that they prohibit or limit automated access for data extraction. Terms of Service can be complicated to interpret and change often, but accessing data in violation of them can get your IP address blocked and, in the most extreme cases, lead to legal action. Always read the ToS.

3. Data Protection and Privacy Regulations (GDPR, CCPA)

This is the most significant factor, especially if you are trying to obtain individual data points such as emails, names, or job titles.

- Do not scrape personal data or non-public data (anything that cannot be accessed without logging in), unless you are logged in with your own credentials and collecting it for personal research purposes.

- Be cautious about scraping personally identifiable information (PII). Regulations such as the GDPR (in Europe) and the CCPA (in California) impose strict requirements on how you collect and process PII.

If you are collecting public professional data, for example by following a tutorial on how to scrape LinkedIn data using Python or by using a scraping service, you must make sure that your collection and use of that data complies with privacy regulations.

Even for professional B2B purposes, it may be wiser to use a managed LinkedIn scraping API service that has compliance built in. The same applies to data collected by any LinkedIn scraper, including job listings gathered from LinkedIn.

4. Do Not Overload the Server

If you are legally permitted to scrape the site, scrape respectfully. Sending too many requests per second (often called aggressive scraping) can slow the website’s server down, or even crash it.

Beyond being bad practice, a flood of automated requests can have the same effect as a Denial of Service (DoS) attack, which can be illegal. No-code scraping tools typically include a time-delay setting that lets you space out your requests.
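The time-delay setting amounts to pausing between requests. A minimal Python sketch of the idea follows. The `fetch_politely` function, the two-second default, and the fake `fetch` used in the demo are assumptions for illustration; the right delay depends on the site.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=2.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    results = []
    for position, url in enumerate(urls):
        if position > 0:
            sleep(delay_seconds)  # wait before every request except the first
        results.append(fetch(url))
    return results


# Demo with stand-ins so the example finishes instantly: a fake fetch function,
# and a list that records the requested pauses instead of actually sleeping.
pauses = []
pages = fetch_politely(
    ["/page/1", "/page/2", "/page/3"],
    fetch=lambda url: f"<html>{url}</html>",
    delay_seconds=2.0,
    sleep=pauses.append,
)
print(len(pages), pauses)
```

In real use you would leave `sleep` at its default so the script genuinely waits between requests.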

Common Challenges in No-Code Web Scraping and How to Solve Them

While no-code tools are fantastic, they are not magic. They face the same core challenges as code-based scrapers, but often have simpler, built-in solutions.

| Challenge | Description | No-Code Solution |
| --- | --- | --- |
| Dynamic Content (JavaScript) | Content loads after the page appears, using JavaScript (e.g., infinite scroll, single-page applications). Simple scrapers miss this data. | Headless Browsing/Rendering: Most advanced no-code tools include a setting to enable JavaScript rendering. This makes the tool act as a full browser, waiting for content to load before scraping. |
| IP Blocks & CAPTCHA | If you make too many requests from a single IP, the website can block you or challenge you with a CAPTCHA. | Proxy Rotation: Advanced platforms integrate with proxy networks, automatically routing your requests through different IP addresses to avoid detection. Some services, such as a sophisticated LinkedIn scraping API, also offer automatic CAPTCHA solving. |
| Website Structure Changes | The website owner changes the class names or layout, breaking your defined scraping logic. | Visual Reconfiguration: The fix is simpler than in code; you just re-point and re-click the elements in the visual builder to define the new structure. Tools with AI features may even auto-correct minor changes. |
| Login Requirements | The data you need is behind a login screen. | Login Configuration: Most desktop and cloud scrapers have a feature that allows you to configure a login sequence (entering a username, password, and clicking “Sign In”) before the scraping task begins. |

Unlocking New Horizons: Creative Applications for No-Code Web Scraping

The data you collect can fuel projects far beyond simple competitor monitoring. No-code scraping unlocks a vast realm of possibilities for innovation across different fields.

For Marketing and SEO

- Content Gap Analysis: Scrape the titles and meta descriptions of thousands of competing blog posts to identify topics your competitors cover, but you don’t.

- Review Aggregation: Gather customer reviews from many sources such as Google Maps and Yelp (and specific industry sites, if available) in order to conduct sentiment analysis.

- Influencer and Lead Discovery: A LinkedIn Profile Scraper can gather publicly accessible information for engagement campaigns so that sales teams can find relevant, high-quality leads. The results can also feed a LinkedIn Company Scraper to build B2B contact lists.

For E-commerce and Retail

- Real-Time Price Monitoring: Schedule an automatic scrape to check competitor pricing on important SKUs hourly or daily, so you can price-match in near real time.

- Inventory/Availability Checks: Keep an eye on inventory counts of hard-to-find or highly desired products at other retailers.
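Once two scheduled scrapes have run, price monitoring comes down to comparing snapshots. The Python sketch below shows that comparison. The SKU names, the prices, and the `price_changes` helper are made up for illustration.

```python
def price_changes(previous, current):
    """Return {sku: (old_price, new_price)} for SKUs whose price differs."""
    return {
        sku: (previous[sku], price)
        for sku, price in current.items()
        if sku in previous and previous[sku] != price
    }


# Made-up snapshots from two consecutive scrapes.
yesterday = {"SKU-1": 8.00, "SKU-2": 9.50, "SKU-3": 12.00}
today = {"SKU-1": 8.00, "SKU-2": 8.99, "SKU-3": 12.00, "SKU-4": 5.00}

changes = price_changes(yesterday, today)
print(changes)
```

SKUs that appear only in the newer snapshot (such as `SKU-4` here) are ignored by this sketch; a fuller version might report them as new listings.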

For Job Seekers and Recruiters

- Hyper-Focused Job Boards: You can gather job listings from LinkedIn and other job boards to create a personalized, aggregated job board that only shows opportunities matching your specific niche criteria, saving you hours of searching.

Final Thoughts: The Easiest Way to Scrape Data Without Programming

The era of data is no longer just for developers. There are now many advanced no-code tools available, from simple browser extensions to robust cloud platforms and sophisticated APIs, that allow everyone to utilize web data.

To learn how to scrape data from a website in today’s context, it is best to select a tool that is right for the job:

- For quick, small, one-off scrapes: A simple browser extension or Google Sheets’ IMPORTHTML function is the way to go.

- For larger-scale, recurring, or more complex projects: A dedicated cloud platform such as Octoparse or a comprehensive managed API will give you power and reliability without the coding involved.

With these options, and by scraping ethically (respecting robots.txt and privacy laws), you can use data insights to innovate and improve business solutions without writing a line of code. Data is power. And that power is available to you.

The Future of Accessible Data Intelligence

The barriers to web data acquisition have crumbled. No-code web scraping is not just a trend; it’s the future of accessible data intelligence. By using the visual, point-and-click tools and API services detailed in this guide, you can eliminate the complexity and cost of traditional coding methods.

You are now equipped with the knowledge to efficiently extract the information you need, whether it’s compiling product lists, conducting competitive analysis, or identifying new market opportunities. Start scraping today, and turn the world’s data into your competitive advantage.

FAQs for How to Scrape Data from a Website

Is web scraping without coding legal?

Web scraping itself exists in a legal gray area. It is generally considered legal to scrape publicly available, non-copyrighted data, provided you do not violate the website’s Terms of Service and, most importantly, you respect privacy laws like GDPR and CCPA, especially regarding Personally Identifiable Information (PII). Always check the website’s robots.txt file and ToS.

What’s the difference between a no-code scraper and a scraping API?

A no-code scraper (like Octoparse or a browser extension) is a visual, point-and-click tool where you build the scraping logic yourself. A scraping API is a managed service that handles the technical complexities (proxies, IP rotation, CAPTCHA) on its own server. You simply send the API a URL, and it sends you back clean data; you don’t build the logic yourself, making it often more reliable for hard-to-scrape, dynamic sites.

Can I scrape data from a website that requires a login without coding?

Yes. Many advanced no-code desktop and cloud platforms (like Octoparse or ParseHub) include features that allow you to configure the necessary login steps (input fields, button clicks) as part of your scraping workflow, enabling you to access the data behind the authentication wall.

Can no-code tools handle dynamic websites that use JavaScript?

The best no-code tools are specifically designed to handle dynamic content. They include a setting for “JavaScript rendering” or “headless browsing,” which instructs the tool to wait for the content to fully load, just as a real browser would, so that the scraper collects all the data.

Can I really export data from a website to Excel without a tool?

For simple, static websites with data structured in HTML tables or lists, you can use the built-in IMPORTHTML function in Google Sheets. Once the data is in your sheet, you can easily download it as a Microsoft Excel file, which serves as the most basic method for how to scrape data from a website into Excel.